A list two ways - Feeds and Context Hub

NetWitness Community Blog


Feeds have long been part of the core RSA NetWitness Platform and are one of the basic building blocks in the product for capture-time enrichment and meta creation. Context Hub lists were added to RSA NetWitness more recently. They provide a search-time list capability that overlays information on top of meta that has already been created, giving the analyst additional context. By default these lists live in separate locations, but with a small amount of effort both can be pointed at the same source. That way, when the feed is updated, the context list is updated too, and it can provide context even for events that occurred before the feed data was created.

Some architecture notes, since this post focuses on the RSA NW11.x codebase:

The directory that feeds are read from in RSA NW11.x is different from the one in RSA NW10.6. The idea behind using the directory below is to pull a data feed from an external source into this local web directory, so that the native RSA NetWitness feed wizard and the native Context Hub wizard can both read from it.

This is the new location where feeds can be placed so that the feed wizard can read them from the local RSA NW Head Server. Note: you might need to stage the file here yourself if your data requires pre-processing, such as removing stray commas or removing/adding columns of data.

Your CSV feed file (or a directory containing it) goes here:

NW Head Server (node0)

/var/lib/netwitness/common/repo
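If your source data needs clean-up before it lands in /var/lib/netwitness/common/repo, a small script can normalize it first. The sketch below assumes a simple comma-separated feed with an optional `#` header line; the function name `clean_feed` and the three-column layout in the example are hypothetical. It drops blank lines, strips whitespace, trims empty trailing fields left behind by stray commas, pads short rows, and reports (rather than guesses at) rows that still have too many fields.

```python
from __future__ import annotations

import csv
import io


def clean_feed(raw_text: str, expected_columns: int) -> tuple[str, list[int]]:
    """Hypothetical helper: normalize feed rows to a fixed column count.

    Returns the cleaned CSV text and the line numbers of rows that were skipped.
    """
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    skipped = []
    for lineno, line in enumerate(raw_text.splitlines(), start=1):
        if not line.strip():
            continue  # drop blank lines
        if line.startswith("#"):
            out.write(line + "\n")  # keep the header line unchanged
            continue
        fields = [f.strip() for f in next(csv.reader([line]))]
        # Remove empty trailing fields left by stray commas.
        while len(fields) > expected_columns and fields[-1] == "":
            fields.pop()
        if len(fields) > expected_columns:
            skipped.append(lineno)  # ambiguous row: report it, don't guess
            continue
        fields += [""] * (expected_columns - len(fields))  # pad short rows
        writer.writerow(fields)
    return out.getvalue(), skipped
```

You could run this against the file pulled from your external source and write the cleaned text to /var/lib/netwitness/common/repo/<feed_name.csv>; any skipped line numbers are worth reviewing by hand.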

You will also need to make a small change to the /etc/hosts file on the head server so that Context Hub can read this location. HTTPS connections are now enforced, and this entry helps get past the certificate checking process.

Hosts file (node0)

/etc/hosts

127.0.0.1 netwitness

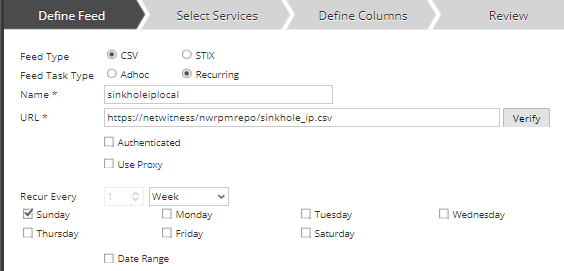
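To confirm the hosts entry is in place before pointing the feed wizard at it, you could check /etc/hosts with something like the following. This is a minimal sketch that assumes the alias `netwitness` from the entry above; `hosts_maps` and `check_hosts_file` are hypothetical helper names, and the parser only handles the simple `address name [name ...]` layout with `#` comments.

```python
from pathlib import Path


def hosts_maps(hosts_text: str, hostname: str, address: str = "127.0.0.1") -> bool:
    """Return True if hosts_text maps hostname to address."""
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        fields = line.split()
        # First field is the address; the remaining fields are names/aliases.
        if fields[0] == address and hostname in fields[1:]:
            return True
    return False


def check_hosts_file(path: str = "/etc/hosts", hostname: str = "netwitness") -> bool:
    """Read the real hosts file on the head server and check for the alias."""
    return hosts_maps(Path(path).read_text(), hostname)
```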

Create your feed as usual, but use the recurring feed option (even if the feed never gets updated, this option gives us the URL field).

Next, set the URL to https://netwitness/nwrpmrepo/<feed_name.csv>

(netwitness is used here to point to localhost via the hosts file change we made above. Use whatever name you added to your /etc/hosts file.)

Next, set the other options as normal, apply the feed to your Decoder service group, select the columns as you would for a normal feed, and click Apply.

You now have a capture-time feed ready to go, just as you normally would.

On to the Context-Hub

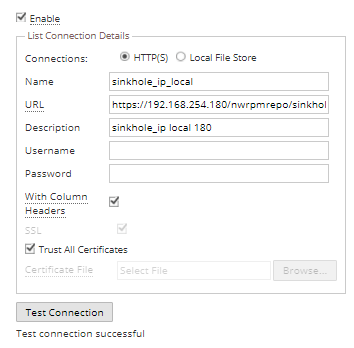

- Right-click the Context Hub service > Configure

- Create your list > enable it

- Set your connection to HTTP(S)

- URL: https://<nw11headip>/nwrpmrepo/<feed_name.csv> (keep in mind that Context Hub runs on the ESA, so the address must be the NW head server IP and not localhost)

- Description:

- Enable with headers if your feed has a #header line naming the columns
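The feed wizard and Context Hub read the same file through two different URLs. The sketch below simply builds both from one file name so they stay in sync; `feed_urls` is a hypothetical helper, and the `/nwrpmrepo/` path and `netwitness` alias are taken from the steps above. Substitute your own head server IP for the placeholder.

```python
from __future__ import annotations

from urllib.parse import quote

REPO_PATH = "/nwrpmrepo/"  # web path used in the steps above


def feed_urls(feed_file: str, head_server_ip: str, local_alias: str = "netwitness") -> dict[str, str]:
    """Hypothetical helper: return both URLs that point at the same feed file."""
    name = quote(feed_file)  # keeps "/" so a subdirectory path also works
    return {
        # The feed wizard runs on the head server, so the /etc/hosts alias works.
        "feed_wizard": f"https://{local_alias}{REPO_PATH}{name}",
        # Context Hub runs on the ESA, so it needs the head server's IP.
        "context_hub": f"https://{head_server_ip}{REPO_PATH}{name}",
    }


# feed_urls("sinkhole_feed.csv", "192.0.2.10")["context_hub"]
# -> "https://192.0.2.10/nwrpmrepo/sinkhole_feed.csv"
```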

I chose to overwrite the list rather than append values, which keeps the list fresh with only what the source currently provides.

I then selected IP as the column to match.
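The overwrite versus append choice matters most for stale entries. The following is a simplified model of the two modes as set operations, not Context Hub's actual implementation; treating append as a set union (so duplicates collapse) is an assumption, and `apply_list_update` is a hypothetical name.

```python
from __future__ import annotations


def apply_list_update(current: set[str], source: set[str], mode: str) -> set[str]:
    """Model of a list refresh: 'overwrite' mirrors the source, 'append' accumulates."""
    if mode == "overwrite":
        return set(source)  # entries no longer in the source drop out
    if mode == "append":
        return current | source  # old entries stay, even if the source removed them
    raise ValueError(f"unknown mode: {mode!r}")
```

With overwrite, an IP that disappears from the source feed also disappears from the context list; with append it lingers, which is the staleness the overwrite choice avoids.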

*** One thing to note: if you use headers to remind yourself which column goes into which meta key, make sure you do not use PERIODS. In the feed wizard this doesn't matter, but Context Hub stores this data in Mongo, where PERIODS have a special meaning, so replace the dots in your headers with _. ***

For instance,

#alias.ip

should be

#alias_ip

The first option will error, the second is good to go.
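Because the data rows contain IP addresses, the dot replacement should touch only the header line, not the whole file. A minimal sketch, assuming header lines start with `#` as in the example above; `sanitize_headers` is a hypothetical name.

```python
def sanitize_headers(feed_text: str) -> str:
    """Replace '.' with '_' in '#' header lines only; data rows are left alone."""
    return "\n".join(
        line.replace(".", "_") if line.startswith("#") else line
        for line in feed_text.split("\n")
    )
```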

The Reveal

Once the feeds and context are set up, you can look for hits on the feed and the additional meta created from it, and then see the grey meta overlay for context, which you can open with the context fly-out panel.

Based on our configured feed, we should have an entry in the IOC meta key (ioc contains 'sinkhole').

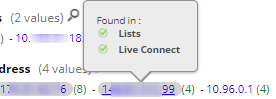

Next, look at the IP addresses to see whether there are grey indicators: right-click the IP and select Live Connect Lookup, which opens the panel on the right.

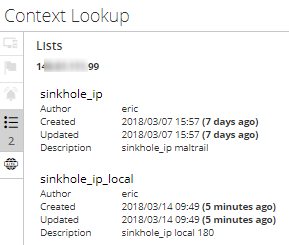

Now you can see which lists this IP lives in; in this case it matches 2 lists.

This also works for an IP that was seen before the feed was created, because Context Hub is a search-time overlay rather than an index-time one.

From now on, as the feed is updated, both areas are updated together.
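The difference between the two list types can be shown with a toy model. This is a simplified sketch of the concept, not NetWitness internals: `Session`, `capture`, and `context_hit` are hypothetical names, and the `ioc` key and `sinkhole` value mirror the example feed above. Capture-time feed meta is written only when a session is created, while a Context Hub lookup is evaluated against the current list each time you search.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    ip: str
    meta: dict = field(default_factory=dict)


def capture(ip: str, feed: dict) -> Session:
    """Capture time: feed meta is attached once, when the session is created."""
    session = Session(ip)
    if ip in feed:
        session.meta["ioc"] = feed[ip]
    return session


def context_hit(session: Session, context_list: set) -> bool:
    """Search time: checked against whatever the list holds right now."""
    return session.ip in context_list


# Day 1: the feed does not yet know about this IP.
feed: dict = {}
old_session = capture("203.0.113.7", feed)

# Day 2: the shared CSV is updated; the feed and the context list both reload it.
feed["203.0.113.7"] = "sinkhole"
context_list = set(feed)
new_session = capture("203.0.113.7", feed)
```

The older session never gains `ioc` meta, but the context lookup still flags it, which is why the search-time overlay covers events from before the feed entry existed.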

If you would like to perform these steps but are uncomfortable doing so yourself, please contact your account manager, who can help you get a very affordable Professional Services engagement to assist. Otherwise, good luck and happy hunting!

- Eric


