HTTPS Insecure Cipher Detection

Overview

Since the pandemic, encrypted traffic has grown noticeably across web browsing, API calls, and cloud interactions, most of it carried over HTTPS. However, the mere presence of encryption doesn't guarantee its strength: encrypted communications often still negotiate obsolete, vulnerable, or otherwise risky ciphers. As the volume of encrypted traffic rises, so does the number of audits assessing how well that traffic is actually protected. Recently, a leading global bank presented us with this challenge, which prompted this blog on identifying and detecting weak or risky ciphers using NetWitness.

For this use case, we will be pulling data from https://ciphersuite.info/, which scrapes its information from https://www.iana.org/assignments/tls-parameters/tls-parameters.xml#tls-parameters-4. The nice thing ciphersuite.info does for us is expose the data in JSON format via a simple API. This in turn makes it easy for us to collect that information and ingest it into NetWitness for real-time tagging and alerting.

Regardless of which option below best suits your need, the script used to generate the CSV in this blog is available here:

    #!/usr/bin/python3
    import json
    import requests
    import csv

    '''
    Date: 2-9-24
    Author: Cody Spooner
    Purpose: Curate list of ciphers with associated risk from ciphersuite.info. The list is to be used for real-time tagging and/or alerting within the NetWitness platform.
    '''

    def get_data(): #get JSON data from API
        data_dict = {} #create empty dictionary
        r = requests.get("https://ciphersuite.info/api/cs/") #call ciphersuite API
        data = r.json() #read returned JSON data
        for item in data['ciphersuites']: #populate dictionary with returned values with keys as gnutls names to match the cipher meta key
            for key, value in item.items():
                data_dict[key] = value
        return data_dict #return dictionary to be called

    #fields in dictionary values, for reference when tweaking this script
    '''{'gnutls_name': 'TLS_SRP_SHA_AES_128_CBC_SHA1', 'openssl_name': 'SRP-AES-128-CBC-SHA', 'hex_byte_1': '0xC0', 'hex_byte_2': '0x1D', 'protocol_version': 'TLS', 'kex_algorithm': 'SRP', 'auth_algorithm': 'SHA', 'enc_algorithm': 'AES 128 CBC', 'hash_algorithm': 'SHA', 'security': 'weak', 'tls_version': ['TLS1.0', 'TLS1.1', 'TLS1.2', 'TLS1.3']}'''

    def make_csv(data_dict): #create a CSV from JSON data
        with open('cipher_feed.csv', 'w', newline='') as feed: #open new file to be written; closed automatically when the block ends
            csv_writer = csv.writer(feed, lineterminator='\n')
            for key, value in data_dict.items():
                csv_writer.writerow([key, value['security'], value['openssl_name']]) #write structured CSV rows

    if __name__ == "__main__": #main function
        data_dict = get_data()
        make_csv(data_dict)

Option 1: ADHOC

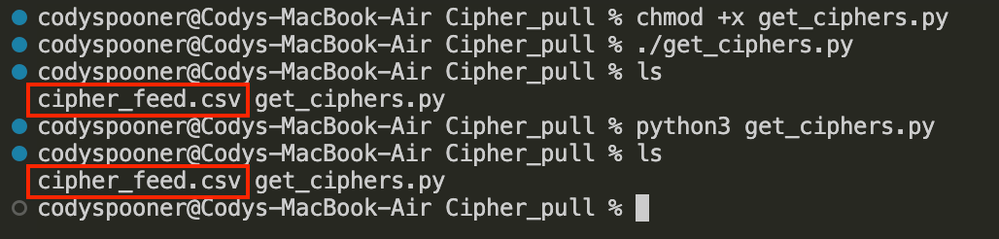

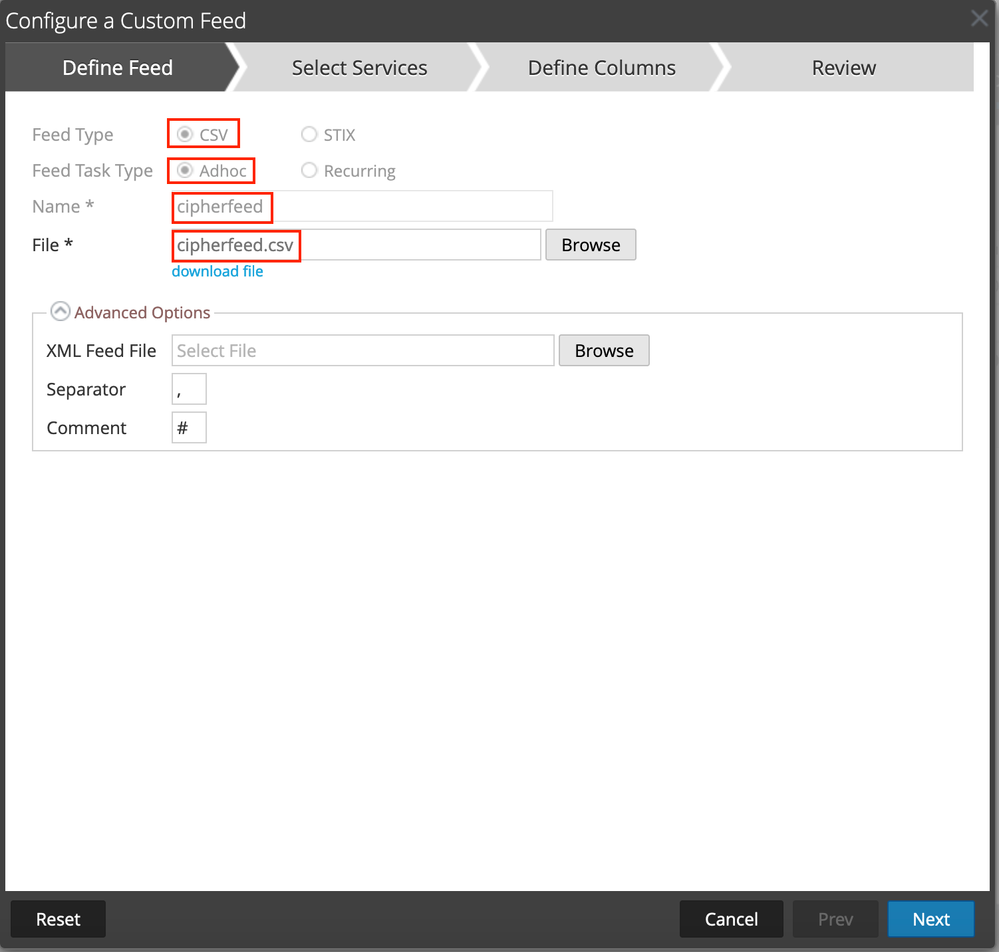

The one-off, manual curation of data takes the least effort upfront. Simply execute the script above, and we'll walk through adding the generated CSV to the NetWitness Custom Feeds section in the UI.

Depending on your operating system (OS) you may need to make the script executable before being able to simply run it. Alternatively, you can run the script via python3 without needing to add the execution (+x) permission.

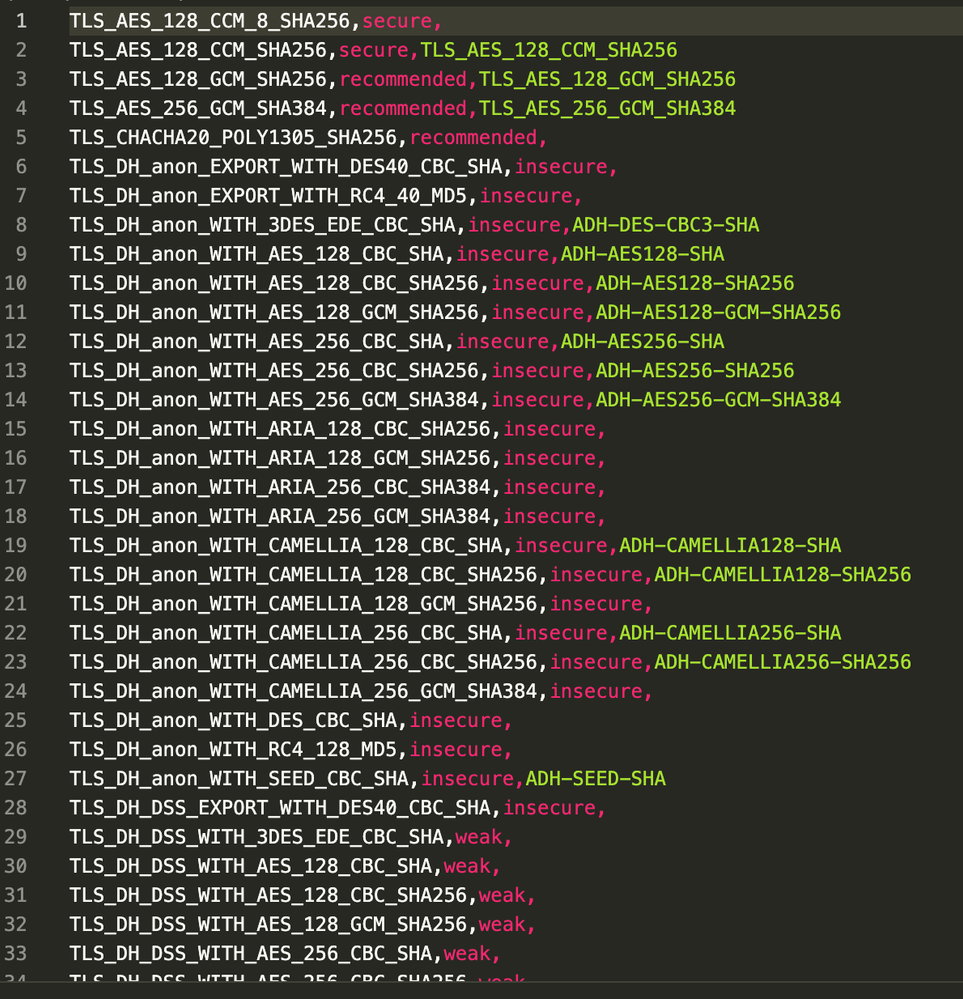

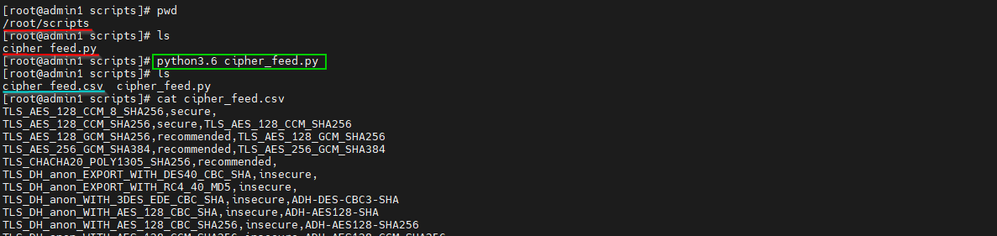

The generated CSV should look like the image below after successful script execution.

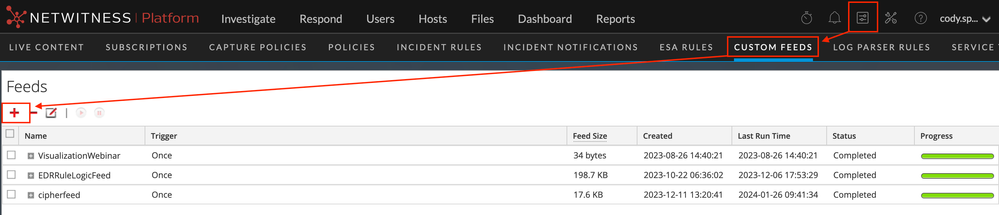
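If you would rather check the file programmatically than by eye, a minimal sketch along these lines can help. It assumes the three-column layout written by the script above (cipher name, risk, OpenSSL name) and the four risk labels used in the Investigate query later in this post; `summarize_feed` and `KNOWN_RISKS` are hypothetical names introduced here for illustration.

```python
import csv
from collections import Counter

# Risk labels taken from the Investigate query later in this post (assumption:
# the feed contains no other labels).
KNOWN_RISKS = {"insecure", "weak", "secure", "recommended"}


def summarize_feed(path="cipher_feed.csv"):
    """Count rows per risk label and collect rows that look malformed."""
    counts = Counter()
    bad_rows = []
    with open(path, newline="") as feed:
        for line_no, row in enumerate(csv.reader(feed), start=1):
            # Each row is expected to be: cipher name, risk, OpenSSL name.
            if len(row) != 3 or row[1] not in KNOWN_RISKS:
                bad_rows.append((line_no, row))
                continue
            counts[row[1]] += 1
    return counts, bad_rows
```

Calling `summarize_feed()` from the directory that holds `cipher_feed.csv` returns the per-label counts and a list of any rows worth a second look before the file is uploaded.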

Once the CSV is created, simply log in to the UI and head over to the Custom Feeds configuration page (Configure -> Custom Feeds).

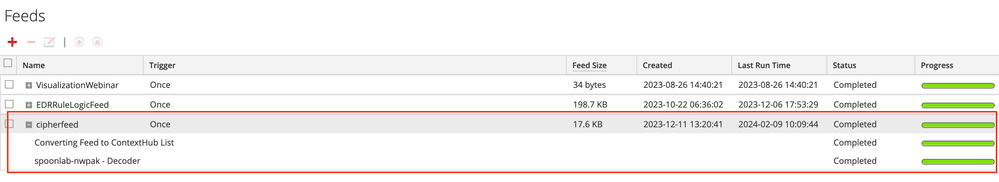

Option 2: Recurring (automatic) Feed

In this section, we will walk through automating the process outlined in the section above (Option 1: ADHOC). Automating the feed curation relieves workload from the administrators of the platform and ensures that analysts, hunters, and any detection logic have the most up-to-date information. One really useful aspect of the admin server is that it can read its own repo directory via a recurring feed configuration. We will leverage the python script (available towards the beginning of this post), a couple of directory modifications, and a couple of cron jobs to automate the generation and serving of the CSV file.

First, I highly recommend reviewing the following blog post as we'll be using sections of it for this post: A list two ways - Feeds and Context Hub - NetWitness Community - 518883

Second, we'll want to start by transferring the python script to the admin server. While I used SCP to transfer the file, you can use whatever method is allowed by your organization. Both SCP and SFTP work over SSH, which is open by default on the admin server appliance. I also recommend creating a dedicated 'scripts' directory. If you've had a previous engagement with our professional services team, there may already be a scripts directory in /var/netwitness/ or /root/. This is not necessary, but it helps keep things organized as you do more fun stuff like this in the future. For the purposes of this blog, I moved the script to /root/scripts in my lab as shown below.

By default, NetWitness packages Python 3.6. Since the script does not need any libraries beyond what is already on the admin server, it should run as expected as soon as it is moved over. You can run it manually now to verify, and we will use the generated CSV in a later step.
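The script imports requests alongside the standard-library json and csv modules. A quick way to confirm that all three resolve under the admin server's Python before scheduling anything is sketched below; `missing_modules` is a hypothetical helper name introduced for this example, not part of NetWitness.

```python
import importlib.util


def missing_modules(names=("json", "csv", "requests")):
    """Return the module names that this Python interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

An empty list from `missing_modules()` means the imports at the top of the script should succeed; any name that comes back needs to be installed first.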

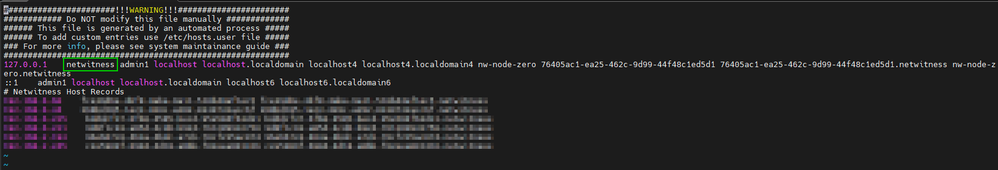

Next, to avoid a certificate check on the local repo, we'll need to modify the /etc/hosts file (you can use 'vi' or 'nano'). You can add whatever string you want as an identifier, but for the purposes of this blog, I added the string "netwitness" to the beginning of the 127.0.0.1 line as shown below.

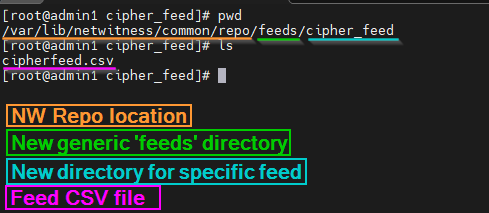

Once that is done, we can move on to creating a couple of directories and moving our CSV file to them. Please use the 'cd' command to move to /var/lib/netwitness/common/repo .

I created a 'feeds' and 'cipher_feed' directory to better organize any future additions as shown below.

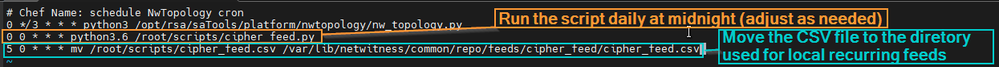

Once that is complete we will likely want to automate this as well. That is where a couple cron jobs come in handy. First, we'll set the script to execute at midnight (00:00). Then, we'll set a cron to move the file to the directory referenced in the image above at five minutes past midnight (00:05). Please adjust these as you see fit. You can create new cron jobs via the 'crontab -e' command as shown below.
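As an optional variation on the second cron job, the move can be done from Python so that the feed directory never holds a half-written file. This is only a sketch under stated assumptions, not the method used in this post: `publish_feed` is a hypothetical helper, and the source and destination paths are whatever you chose in the steps above.

```python
import os
import shutil
import tempfile


def publish_feed(src_csv, dest_dir):
    """Copy src_csv into dest_dir without exposing a partially written file."""
    os.makedirs(dest_dir, exist_ok=True)
    final_path = os.path.join(dest_dir, os.path.basename(src_csv))
    # Write to a temporary file in the same directory, then rename it into
    # place; os.replace is atomic when both paths are on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=dest_dir, suffix=".tmp")
    os.close(fd)
    try:
        # shutil.copy also copies the source file's permission bits.
        shutil.copy(src_csv, tmp_path)
        os.replace(tmp_path, final_path)
    except Exception:
        os.remove(tmp_path)
        raise
    return final_path
```

Pass absolute paths for both arguments, since a job started by cron does not necessarily run from the directory the script lives in.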

To ensure that the changes are applied, you can execute the following command: 'systemctl restart crond.service'. This is it for the backend work. At this point, we can pivot back to the UI and head to the Custom Feeds section.

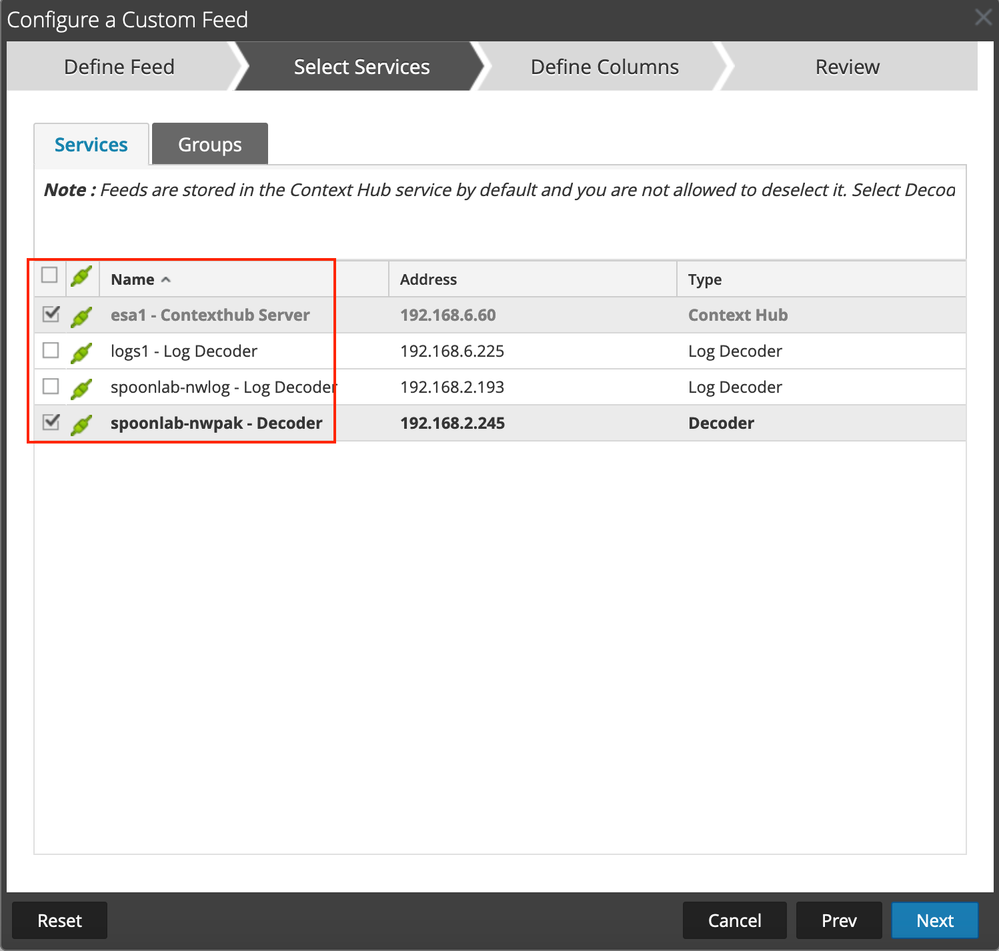

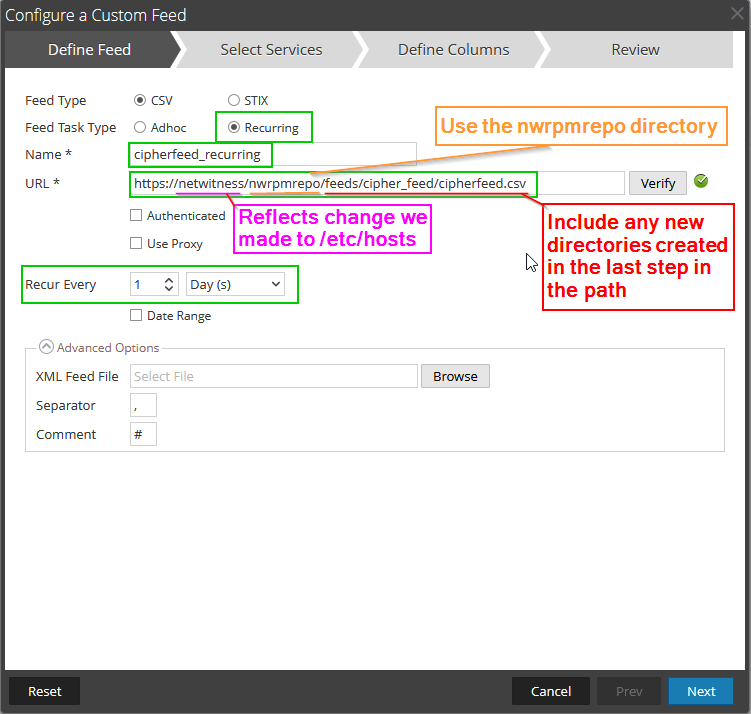

Just like in the previous option, we'll start by creating a new custom feed and assigning the services for the feed to be deployed to. Instead of selecting the ADHOC option, we'll want to select 'recurring'.

You can adjust the recurrence as needed. Just keep in mind the cron jobs that were created in the previous step, as you'll want those to align. The URL will use the value we added to the /etc/hosts file in a previous step (e.g., netwitness), followed by "/nwrpmrepo/path/to/CSV/here/". "nwrpmrepo" replaces the actual file path ("/var/lib/netwitness/common/repo/") that we observed in a previous step. Since we added custom directories to that path, we still need to add those to the URL, as shown in the image above. Once that is done, you can click "verify" to ensure that the file can be read as expected. After all the configuration changes above are set, you can click "Next" to move on to the feed indexing configuration. This portion uses the same configuration as Option 1.
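The path-to-URL mapping described above can be sketched in a few lines. `feed_url` is a hypothetical helper introduced for illustration; the scheme is left as an argument because it depends on how your admin server serves the repo, and the host is whatever string you added to /etc/hosts.

```python
REPO_ROOT = "/var/lib/netwitness/common/repo/"  # file path named in this post
URL_PREFIX = "/nwrpmrepo/"                      # URL alias named in this post


def feed_url(csv_path, host, scheme):
    """Translate a file path under the repo directory into a feed URL."""
    if not csv_path.startswith(REPO_ROOT):
        raise ValueError(f"{csv_path} is not under {REPO_ROOT}")
    # Keep only the part of the path below the repo root (custom directories
    # plus the file name) and append it to the alias.
    relative = csv_path[len(REPO_ROOT):]
    return f"{scheme}://{host}{URL_PREFIX}{relative}"
```

For example, with a made-up directory layout, `feed_url("/var/lib/netwitness/common/repo/feeds/cipher_feed.csv", "netwitness", "https")` yields a URL ending in `/nwrpmrepo/feeds/cipher_feed.csv`.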

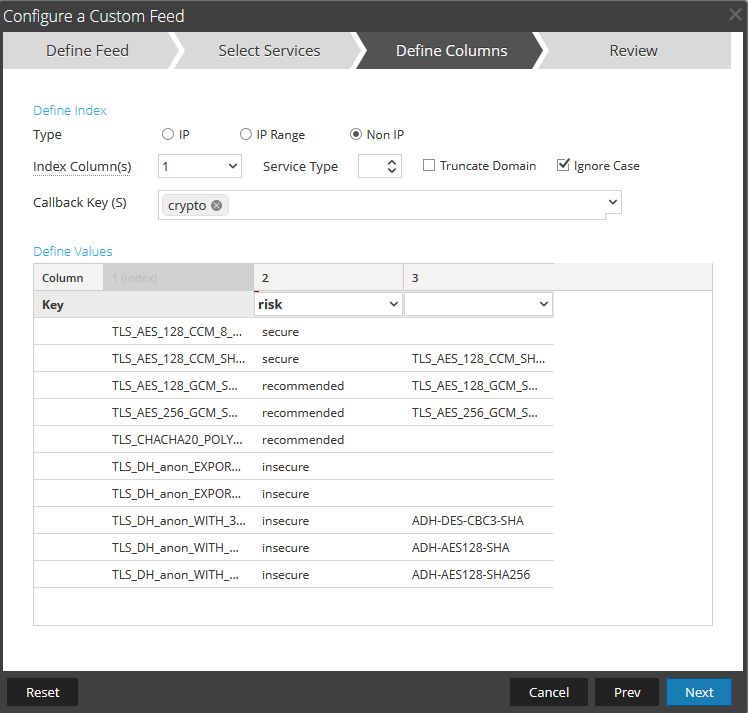

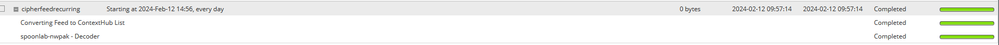

Once the configuration is completed as shown above, you can click "Next" and review the feed before deploying. Once you are happy with it, you can deploy the feed and you should be greeted with a success message.

Investigating Feed Tags

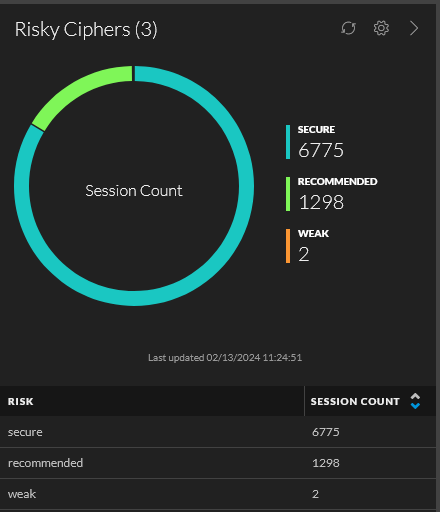

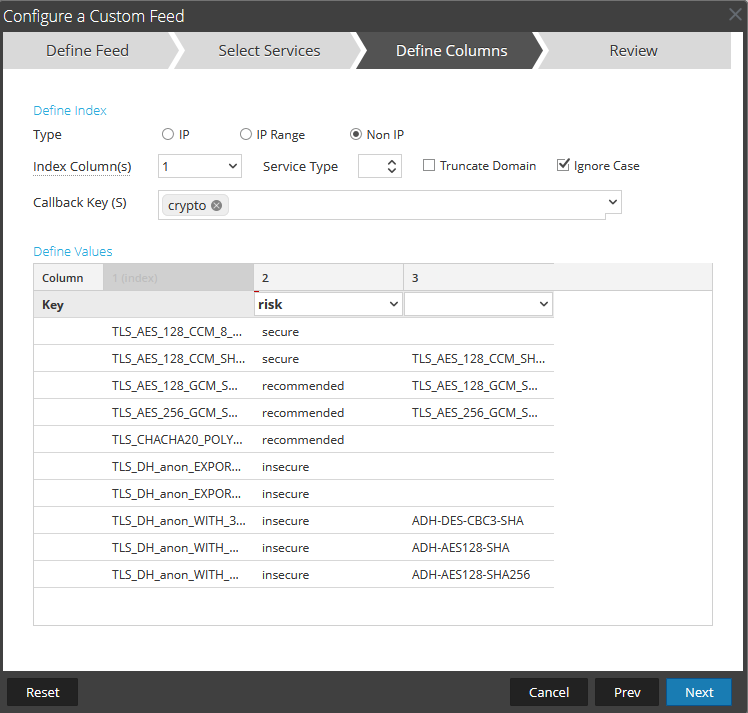

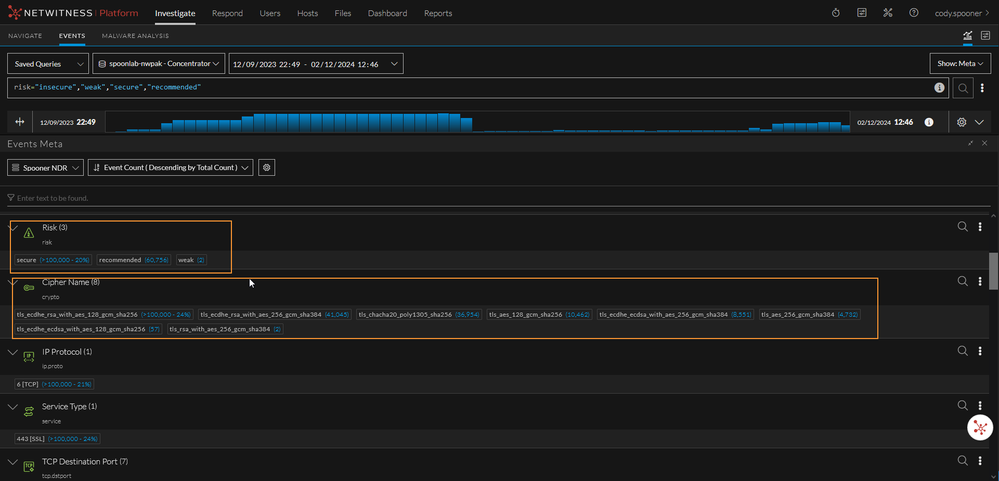

Now that we're through the setup (manual or automated), we can explore how to leverage the real-time tagging capabilities within NetWitness. When a match for the cipher is found within the "crypto" meta key, the associated risk tag is populated into the "risk" meta key. For example, if the cipher TLS_AES_128_CCM_8_SHA256 was observed during the real-time parsing, the associated value "secure" would parse into the "risk" meta key and make it available for both investigative and detection purposes.
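Conceptually, the tagging behaves like a dictionary lookup keyed on the cipher name. The sketch below is a simplified model of that idea, not NetWitness code: `load_feed` and `tag_session` are hypothetical names, a session is modeled as a plain dict with a single "crypto" value, and the sample rows are illustrative (the third column is left blank where this post does not show it).

```python
import csv
import io

# Illustrative rows in the layout the script writes: cipher, risk, OpenSSL name.
SAMPLE_FEED = (
    "TLS_SRP_SHA_AES_128_CBC_SHA1,weak,SRP-AES-128-CBC-SHA\n"
    "TLS_AES_128_CCM_8_SHA256,secure,\n"
)


def load_feed(csv_text):
    """Build a cipher-name -> risk lookup from the feed's CSV rows."""
    rows = csv.reader(io.StringIO(csv_text))
    return {row[0]: row[1] for row in rows if len(row) >= 2}


def tag_session(meta, feed):
    """Return a copy of the session meta with 'risk' added on a feed match."""
    tagged = dict(meta)
    risk = feed.get(meta.get("crypto"))
    if risk is not None:
        tagged["risk"] = risk
    return tagged
```

With `feed = load_feed(SAMPLE_FEED)`, a session whose crypto value is TLS_AES_128_CCM_8_SHA256 comes back with risk set to "secure", while a session with an unlisted cipher is returned unchanged.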

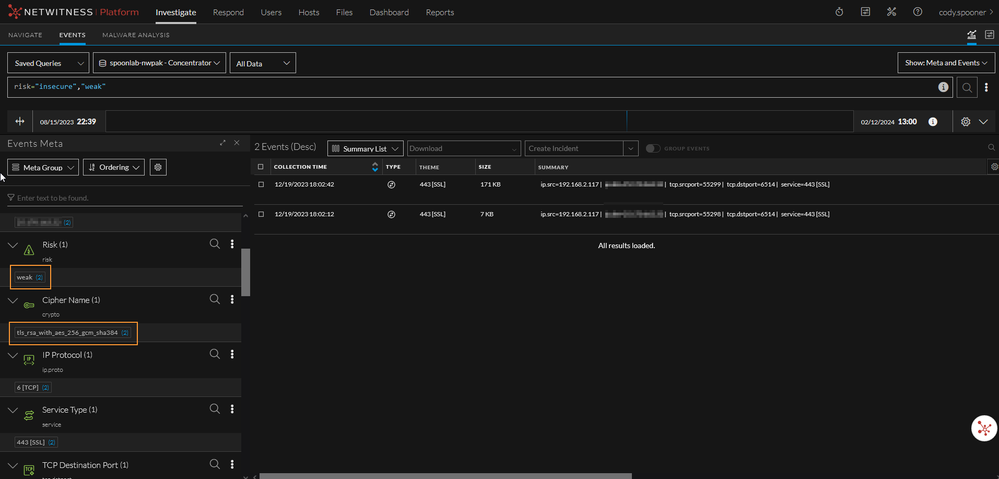

First, let's walk through how to hunt or data-carve matches to the feed inside the Investigate module. You can start with a query such as:

risk="insecure","weak","secure","recommended"

This will return all data within the given time range that has matched a cipher listed in the feed.

Let's say you only wanted to look for "weak" or "insecure" ciphers. We can adjust our query accordingly.

The results will vary in your environment but an example of that query modification is below.

risk="insecure","weak"
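If you generate these queries from a script (for example, to keep a saved query in step with the labels in the feed), the comma-separated form shown above is easy to build. `risk_query` is a hypothetical helper written for this post's query style only.

```python
def risk_query(levels):
    """Render risk levels in the comma-separated form used in this post."""
    if not levels:
        raise ValueError("at least one risk level is required")
    return "risk=" + ",".join(f'"{level}"' for level in levels)
```

Calling `risk_query(["insecure", "weak"])` reproduces the narrowed query above.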

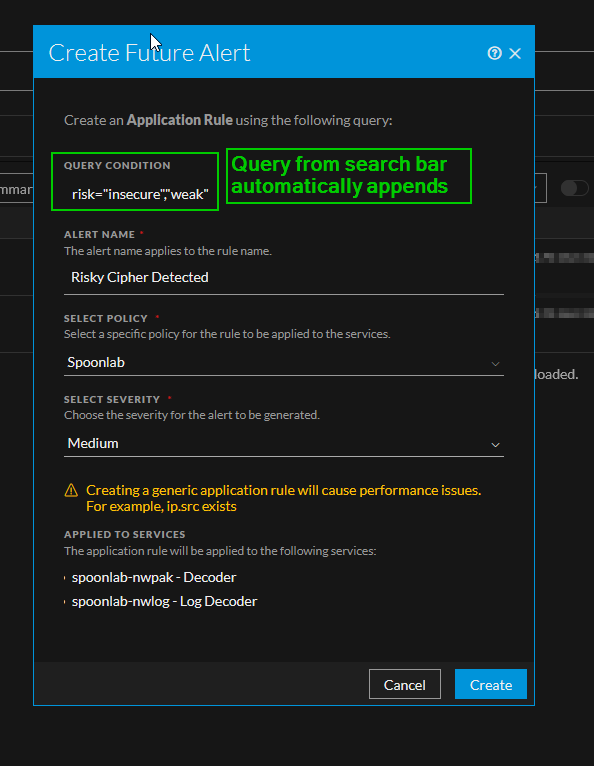

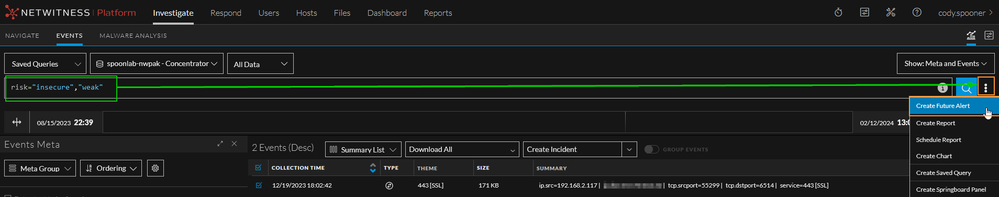

Now, we can go one step further and translate our query to detection logic. In NetWitness version 12.3.0+ we introduced an exciting new feature that allows hunters and analysts to translate their queries into detection logic with a simple button click. This REQUIRES the new Centralized Content Management (CCM) feature to be configured. More information can be found here: https://community.netwitness.com/t5/netwitness-platform-online/centralized-content-management-guide-for-12-3-1/ta-p/705084

Start by clicking on the three dots at the far right-hand side of the search bar and select the "Create Future Alert" button. You will be greeted with an alert configuration page.

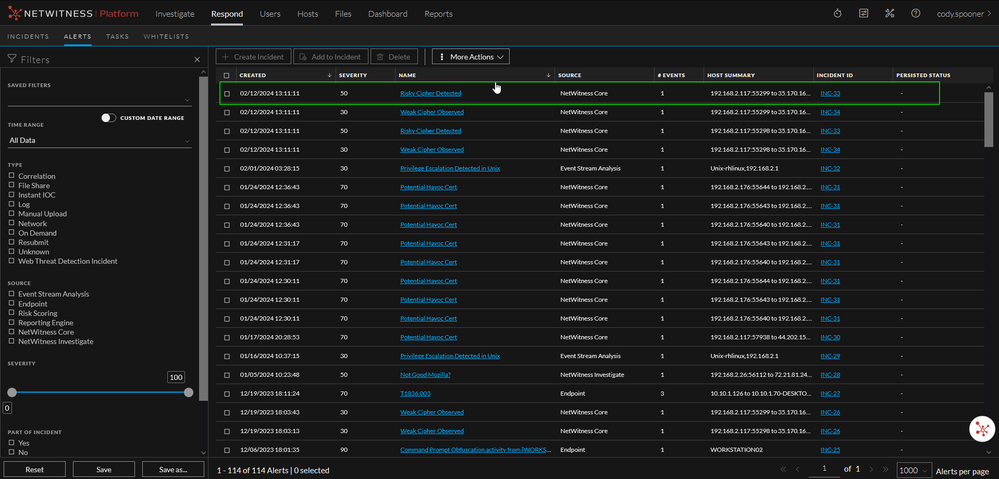

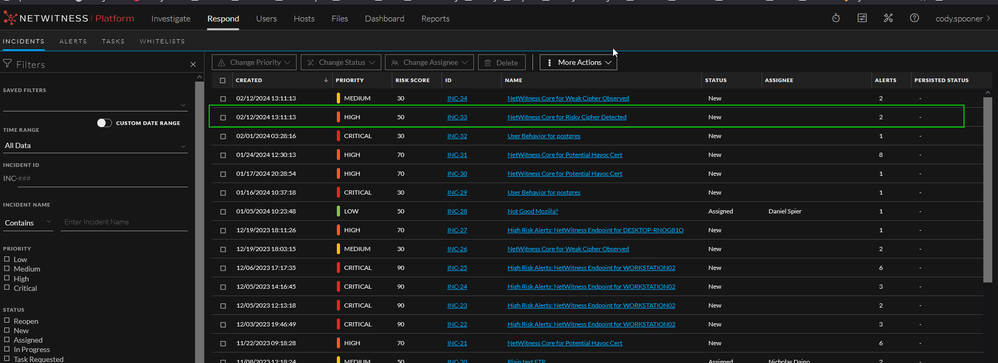

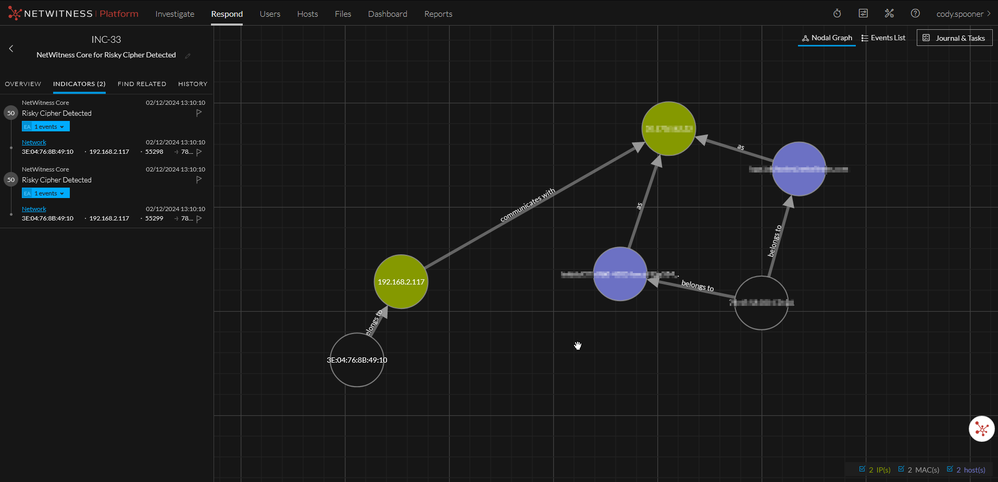

Once a match is triggered, an alert will be generated in the Respond module and turned into an Incident. Another new feature available in NetWitness version 12.3.0+ is the ability to forward incidents to a SIEM over syslog or set up email notifications. Both the alert and associated incident are shown below.

Review

In review, identifying weak/insecure ciphers is growing in necessity for organizations across the globe. The process for tagging and alerting on such ciphers is trivial within the NetWitness platform. Throughout this blog, we walked through creating an ADHOC feed, automating feed curation, and leveraging the generated tags for valuable insight for security teams. This insight can be in the form of proactive hunting or reactive detection logic.
