
Detailed example: how to extract pcap for any query and extract meta values for any sessions using REST SDK API


2012-12-13 03:36 PM

During a forensic investigation using an RSA/NetWitness system, one often needs to save raw packet data and meta values from particular sessions of interest into pcap or XML/JSON files before the captured data rolls out of the RSA/NetWitness databases.

Broker/concentrator example:

- Need to extract raw packets into a pcap file from sessions with ip.src=10.194.238.251 and alias.host=time.vocalocity.com between 12/6/2012 8am - 9am.

- Need to save all meta values from these sessions into an XML or JSON file.

Decoder example:

- Need to extract raw packets into a pcap file from sessions between 12/10/2012 8:00am - 8:01am.

- Need to save all meta values from these sessions into an XML or JSON file.

The attached PDF provides detailed steps and screen output showing how the above tasks are done on a broker/concentrator and on a decoder.


2014-09-27 03:31 PM

John-- This is excellent stuff and almost exactly fits something I'm trying to do. I'm working to integrate NW with our SIEM, and one of the use cases I'm doing right now is getting a pcap for a specific IP that generated an alert in the SIEM, for a window of time around that alert. I can easily see how to grab all the session ids and then fetch the packets. But one of the things I'm wondering is if it's possible just to store that pcap or those sessions on NW instead of pulling them down. I know they're being stored temporarily, but I'm trying to find a way to tell NW to hold them all permanently with some identifying information like the alert number. Is that possible? Thanks in advance for any help you can give!


2014-09-27 03:56 PM

FYI, starting with 10.4, you can bypass the query and just pass a where clause to the packets command. It will do the query for you on the backend and send back only the packets that match the query:

http://<hostname and port>/sdk/packets?where=ip.src%3D10.194.238.251
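For anyone scripting this, here is a minimal Python sketch of building that URL with the where clause percent-encoded. The function name `build_packets_url` and the host `decoder.example:50104` are placeholders I made up for illustration; only the `/sdk/packets?where=` shape comes from the URL above.

```python
from urllib.parse import quote


def build_packets_url(host_and_port, where):
    """Return a packets URL with the where clause percent-encoded.

    host_and_port is a placeholder such as "decoder.example:50104";
    substitute the address of your own service.
    """
    # safe="" makes quote() encode every reserved character,
    # so "=" becomes %3D as in the example URL.
    return f"http://{host_and_port}/sdk/packets?where={quote(where, safe='')}"


if __name__ == "__main__":
    print(build_packets_url("decoder.example:50104", "ip.src=10.194.238.251"))
```

Encoding the whole clause this way should also keep spaces, quotes, and `&&` in longer where clauses from being mistaken for URL syntax.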

Of course, the where clause can be as complicated as any regular query. This also works with the new 10.4 content search capability "msearch", which lets you search for words (or a regex) in packets or logs and returns the hits. This command returns the same results as the older /sdk search command, but works over many sessions or a query to provide low latency. It can search over raw packets/logs, over the list of meta for each session, or both (see flags).

Here are the parameters used by the command:

msearch: Search for pattern matches in many sessions or packets

security.roles: sdk.content & sdk.meta

parameters:

sessions - <string, optional> The session ID ranges to search

packets - <string, optional> The packet ID ranges to search

search - <string> The search string to use

where - <string, optional> The where clause used to identify sessions to consider for the search

limit - <uint64, optional> The maximum number of sessions to traverse for this search

flags - <string, optional> Flags to use for search. This is a comma separated list of one or more of the flag values regex, sp, sm, ci, pre, post, ds, precache

Here's what the flags mean:

1) regex means the search parameter is a regular expression. Otherwise it is treated as a normal search string

2) sp means search packets or logs. Raw data search.

3) sm means search meta

4) ci means do the search case insensitive

5) pre means return data prior (characters before) the search hit. In other words, provides context to the data found

6) post means return data after (characters after) the search hit. Used for displaying context to the search hit

7) ds means decode session. So, on a packet session, if you were searching an email, all base64 attachments would be decoded to regular files before being searched. This works over all service types for which we have native decoding support (pop, smtp, http, smb, ftp, etc).

8) precache means it will attempt to speed up the content search by making periodic precache calls to upstream decoders before calling msearch on them. Generally speaking, there's no harm in always passing this flag and when it works well, it can greatly speed up the searches.
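As a rough illustration of how the parameters and flags above might be assembled from a script, here is a Python sketch that validates the flag names against the list in this post and produces an encoded query string. The helper name `build_msearch_query` is hypothetical, and the sketch deliberately stops at the query string: the exact REST path for msearch is not shown in this thread, so check it against your own service before using it.

```python
from urllib.parse import quote, urlencode

# Flag names exactly as listed in the post above.
KNOWN_FLAGS = {"regex", "sp", "sm", "ci", "pre", "post", "ds", "precache"}


def build_msearch_query(search, where=None, limit=None, flags=()):
    """Return a percent-encoded query string of msearch parameters."""
    unknown = [flag for flag in flags if flag not in KNOWN_FLAGS]
    if unknown:
        raise ValueError(f"unknown msearch flags: {unknown}")
    params = {"search": search}
    if where is not None:
        params["where"] = where
    if limit is not None:
        params["limit"] = str(limit)
    if flags:
        # The flags parameter is a comma separated list.
        params["flags"] = ",".join(flags)
    # quote_via=quote encodes spaces as %20 instead of "+".
    return urlencode(params, quote_via=quote)


if __name__ == "__main__":
    print(build_msearch_query("glazed donut", where="ip.src=10.194.238.251",
                              flags=("sp", "ci", "precache")))
```

Rejecting unknown flags locally is just a convenience so that a typo is caught before the request is sent.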

The search string can be a regex (depends on what's passed in flags) or it can be a simple text search. The text search provides these capabilities:

1) Each whitespace-delimited word is ANDed, so everything must match. For instance, with search="glazed donut", both glazed and donut must be found in the session (they don't have to be together or in any specific order).

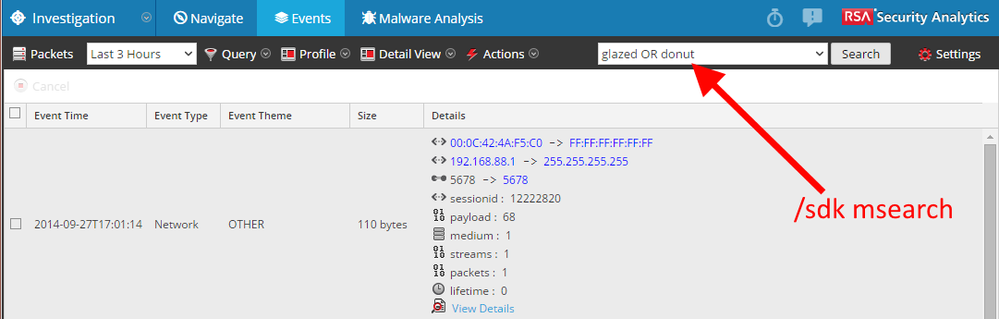

2) The word OR is special, so search="glazed OR donut" means either glazed or donut must be found in the session to match, both are not required. OR must be capitalized. "or" will just look for the word or.

3) You can mix and match implicit ANDs and ORs together in the search string. The explicit OR has higher precedence than the implicit (whitespace) AND, demonstrated as follows:

'cheese toast OR bread dumplings' is the same as the logical statement 'cheese AND (toast OR bread) AND dumplings'

This would require that both the terms 'cheese' and 'dumplings' be present in a match, plus one of 'toast' or 'bread'.

4) Words can be excluded from search results using the '-' operator:

'cheese -toast'

This would return any result that has the word 'cheese' unless the word 'toast' is also present.

5) Words will match any exactly matching substring within a session's content (e.g. 'each' is found in 'beach').
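To make the five rules above concrete, here is a small Python model of the text-search semantics as described in this post. It is only my reading of those rules, not the product's implementation: the names `parse_search` and `matches` are made up, matching is case-sensitive (as if the ci flag were not passed), and behavior for odd inputs such as a leading OR is my own guess.

```python
def parse_search(search):
    """Split a search string into (groups, excluded).

    groups is a list of OR-groups that must all match (implicit AND);
    excluded is a list of words that must not appear.
    """
    groups, excluded = [], []
    pending_or = False      # the previous token was OR following a word
    prev_positive = False   # the previous token was an ordinary word
    for token in search.split():
        if token == "OR":   # only the capitalized form is special
            pending_or = prev_positive
            prev_positive = False
        elif token.startswith("-") and len(token) > 1:
            excluded.append(token[1:])
            pending_or = prev_positive = False
        else:
            if pending_or:
                groups[-1].append(token)   # OR binds tighter than AND
            else:
                groups.append([token])
            pending_or = False
            prev_positive = True
    return groups, excluded


def matches(search, content):
    """Return True if content satisfies the search string (substring match)."""
    groups, excluded = parse_search(search)
    if any(word in content for word in excluded):
        return False
    return all(any(word in content for word in group) for group in groups)


if __name__ == "__main__":
    print(parse_search("cheese toast OR bread dumplings"))
    print(matches("cheese -toast", "cheese on toast"))
```

The first call groups 'toast' and 'bread' together between 'cheese' and 'dumplings', which mirrors the precedence example in rule 3.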

The results are streamed back in real time as they are found. Over the REST interface, this will be via chunked-encoding.


2014-09-27 04:08 PM

BTW, msearch is the backend capability exposed as the new SA 10.4 event search:


2014-09-28 01:58 PM

That's excellent! Thanks for the great information and fast reply, Scott. One last thing, if you don't mind helping me a bit more: when I request the sessions or packets using that API, do I have to fetch them all and pull them back to the host making the request? Or is there a way to ask NW to simply package them up and store them for me?


2014-09-28 05:35 PM

The packets command is a streaming API: it reads the packets from the packet database on the Decoder and sends them to the requesting host. They are already stored on the Decoder, in the packet database, so storing them a second time would consume disk space that is already devoted to the full packet db.

The intent of the command is to provide packets for additional processing outside the Decoder. In a continuously capturing Decoder, the databases are FIFO and there isn't a way to mark specific sessions to keep around forever. Of course, if you have the space elsewhere, you can save those pcaps however you want.
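If you do want to keep a copy elsewhere, a minimal Python sketch of saving the streamed response to disk could look like the following. The names `save_stream` and `download_packets` are hypothetical, the URL is whatever packets URL you built, and authentication is left out entirely because it depends on how your service is configured.

```python
import urllib.request


def save_stream(source, path, chunk_size=64 * 1024):
    """Copy a readable binary stream to path in chunks; return bytes written."""
    written = 0
    with open(path, "wb") as out:
        while True:
            chunk = source.read(chunk_size)
            if not chunk:          # an empty read means end of stream
                break
            out.write(chunk)
            written += len(chunk)
    return written


def download_packets(url, path, timeout=60):
    """Stream the response body of url into path without buffering it all.

    Assumes no authentication is needed; add a handler or header for yours.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return save_stream(response, path)
```

Reading in fixed-size chunks keeps memory use flat even when the query matches a large number of packets, and a file name that includes something like the SIEM alert number is an easy way to keep the identifying information with the pcap.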