How to Validate Your Tap When Deploying a New Decoder

2012-12-12 04:55 PM

Just ran into this scenario in the field and wanted to share. A large customer is bringing on a tapping infrastructure at a new location. On that tap will hang their usual mix of infrastructure: an IDS, a Decoder and a FireEye box. They were using a new tapping appliance, had new contractors setting everything up, and the network traffic at this location was expected to be markedly different from other locations. Their question to me was, "How am I sure that this contractor set up the tap correctly?" Using a Decoder and NetWitness Investigator, this question can be answered quickly.

First, set up your decoder correctly. This includes giving it a proper name, setting NTP and installing NWLive content, custom parsers and custom index files before you ever hit the capture button. Once content is deployed, add the application rules below (the "TimedOut Rules") to your decoder to look for likely timed-out traffic that is being denied by a firewall. Those rules are:

name="Possible Timed Out Web Traffic" rule="tcp.srcport = 80" order=172 alert=alert type=application

name="Possible Timed Out SSL Traffic" rule="tcp.srcport = 443" order=173 alert=alert type=application

name="Possible Timed Out DNS Traffic" rule="udp.srcport = 53" order=174 alert=alert type=application
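To make the logic of these rules concrete, here is a minimal Python sketch of what they match. It is not NetWitness code and does not use any NetWitness API. The `Session` record and the function name are hypothetical, introduced only for illustration. The idea, as described later in this post, is that a session whose source port is a well-known server port (80, 443, 53) is probably a server response to a connection that has already been torn down.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Session:
    """Hypothetical stand-in for one session's meta (not a NetWitness type)."""
    protocol: str  # "tcp" or "udp"
    src_port: int


# (protocol, source port) -> alert name, mirroring the three rules above.
TIMED_OUT_RULES = {
    ("tcp", 80): "Possible Timed Out Web Traffic",
    ("tcp", 443): "Possible Timed Out SSL Traffic",
    ("udp", 53): "Possible Timed Out DNS Traffic",
}


def match_timed_out_rule(session: Session) -> Optional[str]:
    """Return the alert name a session would trigger, or None if no rule matches."""
    return TIMED_OUT_RULES.get((session.protocol.lower(), session.src_port))


if __name__ == "__main__":
    # A session that starts from port 443 looks like a late server response.
    print(match_timed_out_rule(Session("tcp", 443)))
    # A normal client session starts from a high ephemeral port and matches nothing.
    print(match_timed_out_rule(Session("tcp", 51514)))
```
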

Once that is set, start capturing and validate that you can see meta being generated on the Concentrator.

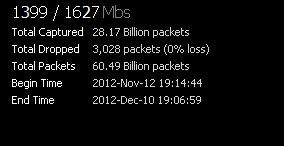

Once you validate operation, it's time to validate the line speed at the tap that the decoder is seeing. First, check the decoder for line rate and any dropped packets.

This image shows a high line rate and negligible packet loss over a 30-day period, so this decoder is not dropping packets in any meaningful quantity.

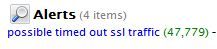
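If you want a number rather than an eyeball reading of the graph, the drop rate is simple arithmetic. The sketch below assumes you have two counters for the same time window, packets captured and packets dropped, and that the captured count does not already include the dropped packets. The function and parameter names are hypothetical, not decoder statistics names.

```python
def packet_drop_percent(captured: int, dropped: int) -> float:
    """Percentage of packets dropped, out of all packets that reached the capture interface.

    Assumes `captured` excludes the dropped packets, so the total is the sum of both.
    """
    if captured < 0 or dropped < 0:
        raise ValueError("counters must be non-negative")
    total = captured + dropped
    if total == 0:
        return 0.0  # nothing arrived, so nothing was dropped
    return 100.0 * dropped / total


if __name__ == "__main__":
    # Hypothetical counters: 1,000 drops against 999,000 captured packets.
    print(f"{packet_drop_percent(999_000, 1_000):.3f}% dropped")
```

Keep in mind that this only measures loss at the decoder. Packets that the tap never delivered are invisible to these counters, which is why the later checks are still needed.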

The next step is to see whether the custom application rules installed above are flagging a high rate of timed-out traffic on this decoder. In this customer's case, there was a high rate of timeouts.

What this suggested to me is that a firewall somewhere along the path of communication was likely overloaded. You see, a firewall has only about 64,000 virtual ports per translated address to assign to dynamic outbound traffic from an infrastructure, because the port field is 16 bits wide. In busier infrastructures, those firewalls have to tear down older virtual port connections to make room for newer outbound connections. The busier the network, the faster these teardowns happen. When web servers respond to older connection requests, sometimes those older virtual ports are no longer available, and the connections get dropped by the firewall. In NetWitness, this traffic typically looks like source port 443 traffic, and it gets dumped into service type OTHER. Identifying this type of timed-out traffic helps to understand service type OTHER, and in this case it showed that this particular segment did have some oversubscription problems, at least at the firewall. But it still does not validate the tap itself.
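A rough back-of-the-envelope calculation shows why a busy network hits this limit. The sketch below is a deliberately simplified model, not a description of how any particular firewall works: it assumes one translated address, one port consumed per outbound connection, a steady connection rate, and oldest-first recycling. Real firewalls track more than the port alone, so treat the result as intuition only. The function name and the example rate are hypothetical.

```python
def max_mapping_lifetime_seconds(port_pool_size: int,
                                 new_connections_per_second: float) -> float:
    """Longest time a port mapping can survive before it must be recycled.

    Simplified model: every new outbound connection takes one port from the
    pool, and when the pool is full the oldest mapping is torn down first.
    """
    if port_pool_size <= 0:
        raise ValueError("port_pool_size must be positive")
    if new_connections_per_second <= 0:
        raise ValueError("new_connections_per_second must be positive")
    return port_pool_size / new_connections_per_second


if __name__ == "__main__":
    # About 64,000 ports at a hypothetical 2,000 new connections per second.
    print(max_mapping_lifetime_seconds(64_000, 2_000))  # 32.0
```

Under these assumptions, any server that takes longer than about 32 seconds to respond would be answering to a port that has already been reassigned, and the firewall would drop the reply.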

Understanding that this segment was under stress, the final validation to check for packet loss on the tap side is actually very quick and easy to do. Simply look at JPEG files to see if they get reconstructed correctly in Investigator. JPEG data is stored as a compressed stream, and progressive JPEGs are built up in successive scans, or layers. If packet loss occurs, the reconstructed image is visibly corrupted and can look like some of these layers have been stripped away.

This image is an example of a conference room photograph that had layers stripped from it due to packet loss. And since the decoder was not detecting any packet loss at its packet engine, the loss must be taking place at the tapping point. If the decoder were dropping packets at its engine, JPEGs would also appear corrupted.
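The visual check in Investigator is the method used here. As a complement, a crude automated pre-filter is possible if you have the reconstructed JPEGs saved to disk somewhere (an assumption, as is the directory layout). A JPEG file begins with the start-of-image marker `FF D8` and ends with the end-of-image marker `FF D9`, so a file missing either one was almost certainly truncated. This minimal sketch only catches truncation. An image with packets missing from the middle can still pass, so it does not replace looking at the pictures. The function names are hypothetical.

```python
from pathlib import Path
from typing import List

JPEG_SOI = b"\xff\xd8"  # start-of-image marker
JPEG_EOI = b"\xff\xd9"  # end-of-image marker


def jpeg_looks_complete(data: bytes) -> bool:
    """True if the bytes start with SOI and end with EOI.

    This is a truncation check only; it does not decode the image.
    """
    return len(data) >= 4 and data.startswith(JPEG_SOI) and data.endswith(JPEG_EOI)


def find_truncated_jpegs(directory: str) -> List[Path]:
    """Return the .jpg/.jpeg files in `directory` that fail the marker check."""
    suspects = []
    for path in sorted(Path(directory).iterdir()):
        if path.suffix.lower() in {".jpg", ".jpeg"} and path.is_file():
            if not jpeg_looks_complete(path.read_bytes()):
                suspects.append(path)
    return suspects
```

A call such as `find_truncated_jpegs("exported_images")` would list the files worth opening first, where `exported_images` is a placeholder for wherever the extracted files were saved.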

The important lesson is this:

Validate your tapping traffic as soon as possible after deploying gear to a new location. This decoder had been in place for almost a month and it appeared that the tap was shedding packets for a while. And when you share infrastructure at a tap that is dropping packets, each appliance on that tap will miss traffic. The IDS might not alert. FireEye will miss packets. And NetWitness meta might not get created correctly.