- Re: Purging Decoder Packet Database

2016-04-20 03:01 PM

Hi All,

We have a requirement to purge the packet data as soon as it has been processed. We don't want to keep the raw packet data even for a second. I'm not sure whether adding the scheduled task below will satisfy the requirement. Can you please help?

addIter minutes=20 pathname=/database msg=timeRoll params="type=packet days=0"

Thanks, Dipin

Accepted Solutions

2016-04-24 03:48 PM

Hello Dipin,

You could create an application rule on the packet decoder that matches all the traffic whose packets you don't want to store (such as "did exists" to match all traffic), then enable "Stop Rule Processing" and choose "Truncate" from the options.

"Truncate" tells the decoder to only keep the headers and meta, and drop the actual payload without storing it whenever the rule is matched.

If you do that, make sure the application rule is always the last one in the list.

2016-04-21 03:51 AM

Hi

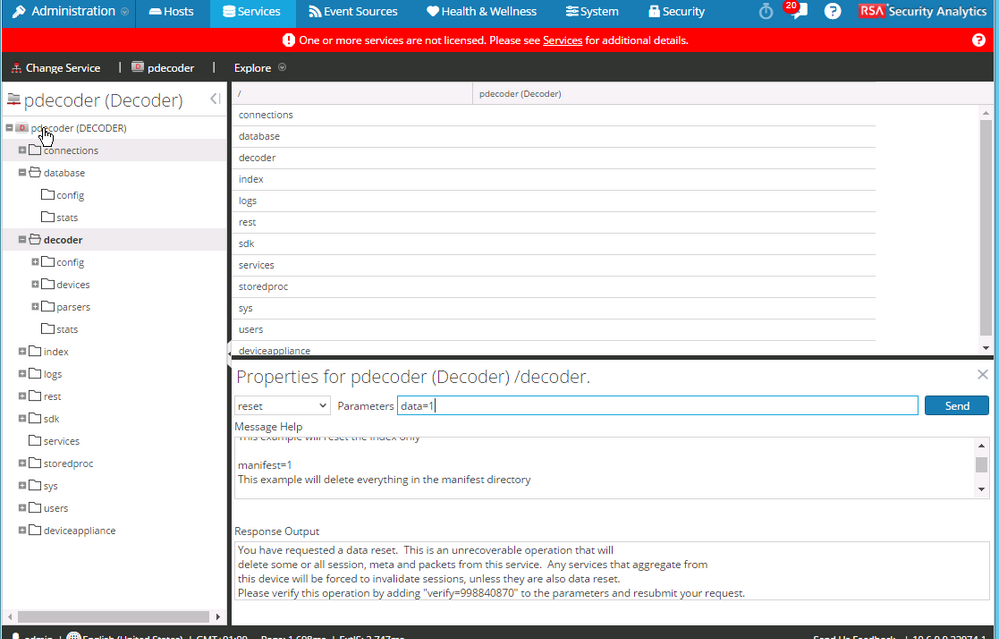

On the SA GUI, go to Services and select the service you wish to remove data from (for example, the packet decoder).

Then go to the Explore view and choose the service (e.g. decoder).

Then use the reset command. The online help is pasted below.

Use reset data=1 index=1 as your parameters to delete the data and the index.

Reset data, index, manifests, stats, configuration, or logs for this service. Data automatically deletes index and stats, unless filesCreatedAfter is specified. Service is automatically restarted.

Example arguments:

data=1 config=1 log=1

This example will reset data, index, logs, and configuration

index=1

This example will reset the index only

manifest=1

This example will delete everything in the manifest directory

filesCreatedAfter="2015-12-01 14:00:00"

This example will delete all session, meta and packet files created on or after Dec 1st, 2015 2pm (UTC) from the service. All other files will remain. The index will not be touched, but upon restart will be truncated to match the last session in the session database.

security.roles: decoder.manage

parameters:

data - <bool, optional> Reset data, automatically resets index (may not be applicable to all services)

index - <bool, optional> Reset index, only valid if data is not reset (may not be applicable to all services)

manifest - <bool, optional> Reset all manifests stored in the long term manifest directory (may not be applicable to all services)

config - <bool, optional> Reset configuration settings to default

stats - <bool, optional> Reset the stats database

log - <bool, optional> Reset the log database

filesCreatedAfter - <date-time, optional> Delete all database files (session, meta, packets) created after a certain date. The index will automatically get rolled back on restart. Not valid with option index.

cl - <string, optional> A (cl="name=value name2=value2") set of options for setting the command line on the next restart
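The parameter rules in the help text above can be checked before a reset is issued. The following is only a minimal sketch: `build_reset_args` is a hypothetical helper, not part of any NetWitness tooling, and it only assembles the argument string. Treating `data=1` together with `index=1` as an error is an assumption based on the "only valid if data is not reset" note; the thread does not say how the service itself reacts to that combination.

```python
from typing import Optional


def build_reset_args(data: bool = False, index: bool = False,
                     manifest: bool = False, config: bool = False,
                     stats: bool = False, log: bool = False,
                     files_created_after: Optional[str] = None) -> str:
    """Assemble an argument string for the reset command (hypothetical helper)."""
    # Help text: index is "only valid if data is not reset".
    # Rejecting the combination here is an assumption of this sketch.
    if data and index:
        raise ValueError("data=1 already resets the index; omit index=1")
    # Help text: filesCreatedAfter is "Not valid with option index".
    if files_created_after is not None and index:
        raise ValueError("filesCreatedAfter cannot be combined with index")

    flags = [("data", data), ("index", index), ("manifest", manifest),
             ("config", config), ("stats", stats), ("log", log)]
    parts = [f"{name}=1" for name, enabled in flags if enabled]
    if files_created_after is not None:
        parts.append(f'filesCreatedAfter="{files_created_after}"')
    return " ".join(parts)


if __name__ == "__main__":
    # Reproduces the first example from the help text.
    print(build_reset_args(data=True, config=True, log=True))
    print(build_reset_args(files_created_after="2015-12-01 14:00:00"))
```

The resulting string would still have to be pasted into the reset command in the Explore view by hand; nothing here talks to the service.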

2016-04-21 03:54 AM

Sorry, I just read your message again. I think what you want to do is remove the data after it is processed. Can you clarify why you don't want to keep the data after it is processed?

If the data is sensitive, then making use of the data privacy options within Security Analytics might be more appropriate. For example, you can replace sensitive data with a hash rather than displaying it.

More information on this is available here

Data Privacy Management - RSA Security Analytics Documentation

2016-04-21 09:41 AM

Hi David

It's based on the policy we have. I just want to know whether it is possible not to store the raw packets after meta creation. If yes, how?

Thanks, Dipin

2016-04-21 10:09 AM

Hi,

The packets will be stored, but as you say, you can write a scheduled task to delete them. The one given above should work.

I looked at the timeRoll parameters, and the command has the following syntax:

Delete database files that exceed a given age

security.roles: database.manage

parameters:

type - <string, {enum-any:session|meta|packet}> The database files to remove

timeCalc - <string, optional, {enum-one:current|last-write}> The time calculation to use (current date or last write date), default is current

minutes - <uint32, optional, {range:0 to 10000}> Remove database files older than the given number of minutes

hours - <uint32, optional, {range:0 to 10000}> Remove database files older than the given number of hours

days - <uint32, optional, {range:0 to 10000}> Remove database files older than the given number of days

date - <string, optional> Remove database files older than the given UTC date (YYYY-MM-DD HH:MM:SS), not compatible with minutes, hours, or days parameters
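To make the constraints in that parameter list concrete, here is a rough sketch that validates timeRoll options and renders a scheduler line in the same shape as the one in the original question. `build_timeroll_params` and `build_add_iter` are hypothetical helper names, and the sketch accepts a single `type` value only, because the thread does not show how several types would be written together.

```python
from typing import Optional

VALID_TYPES = {"session", "meta", "packet"}
VALID_TIME_CALC = {"current", "last-write"}


def build_timeroll_params(db_type: str, minutes: Optional[int] = None,
                          hours: Optional[int] = None, days: Optional[int] = None,
                          date: Optional[str] = None,
                          time_calc: Optional[str] = None) -> str:
    """Validate timeRoll options against the quoted help text (hypothetical helper)."""
    if db_type not in VALID_TYPES:
        raise ValueError(f"type must be one of {sorted(VALID_TYPES)}")
    if time_calc is not None and time_calc not in VALID_TIME_CALC:
        raise ValueError(f"timeCalc must be one of {sorted(VALID_TIME_CALC)}")

    ages = {"minutes": minutes, "hours": hours, "days": days}
    given = {name: value for name, value in ages.items() if value is not None}
    # Help text: date is not compatible with minutes, hours, or days.
    if date is not None and given:
        raise ValueError("date cannot be combined with minutes, hours, or days")
    for name, value in given.items():
        # Help text: each age parameter has the range 0 to 10000.
        if not 0 <= value <= 10000:
            raise ValueError(f"{name} must be between 0 and 10000")

    parts = [f"type={db_type}"]
    if time_calc is not None:
        parts.append(f"timeCalc={time_calc}")
    parts.extend(f"{name}={value}" for name, value in given.items())
    if date is not None:
        parts.append(f"date={date}")
    return " ".join(parts)


def build_add_iter(interval_minutes: int, params: str) -> str:
    """Render a scheduler line shaped like the one in the original question."""
    return (f"addIter minutes={interval_minutes} pathname=/database "
            f'msg=timeRoll params="{params}"')


if __name__ == "__main__":
    print(build_add_iter(20, build_timeroll_params("packet", days=0)))
```

Note that `days=0` is checked with `is not None` rather than truthiness, so a zero age is kept in the output instead of being silently dropped.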

2016-04-25 11:02 AM

Halim,

I'm pretty sure DID is added by the concentrator, not as processed meta by the decoder, so that field would never even exist as far as the decoder is concerned. I could be wrong on that, though. However, your application rule is the right approach. I would add an application rule that is the VERY LAST RULE to truncate all traffic. The best way to do this would be...

Rule Name: Truncate All Payload

where: message=32 || message=1

Actions: Stop Rule Processing, Truncate. Stop Rule Processing is required in order to truncate, and because application rules run in order, this rule must run last so that all your other application rules run first.

message=32 is logs

message=1 is packets

No need for both if you are only doing one of the two.
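Why the position of the truncate rule matters can be shown with a toy model of ordered rule evaluation. This is only an illustration of the ordering argument, not how the decoder is implemented: `AppRule`, `evaluate`, and the "Flag HTTP" rule are hypothetical names invented for the sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class AppRule:
    name: str
    matches: Callable[[Dict[str, str]], bool]
    stop: bool = False       # models "Stop Rule Processing"
    truncate: bool = False   # models the "Truncate" option


def evaluate(rules: List[AppRule], session: Dict[str, str]) -> Tuple[List[str], bool]:
    """Run rules in list order; return (names of rules that fired, payload truncated?)."""
    fired: List[str] = []
    truncated = False
    for rule in rules:
        if not rule.matches(session):
            continue
        fired.append(rule.name)
        truncated = truncated or rule.truncate
        if rule.stop:
            break  # no later rule is evaluated for this session
    return fired, truncated


# Hypothetical rules for the illustration.
flag_http = AppRule("Flag HTTP", lambda s: s.get("service") == "80")
truncate_all = AppRule("Truncate All Payload", lambda s: True, stop=True, truncate=True)

if __name__ == "__main__":
    session = {"service": "80"}
    print(evaluate([flag_http, truncate_all], session))  # truncate rule last
    print(evaluate([truncate_all, flag_http], session))  # truncate rule first
```

With the truncate rule last, both rules fire and the payload is truncated. With it first, the payload is still truncated but "Flag HTTP" never runs, which is the failure mode the advice above avoids.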

2016-04-25 02:59 PM

Hi Halim and Michael,

Thanks for the solution.

As far as I know, we have control over the flow at these points: BPF > Network Rules > App Rules > Correlation Rules.

Why are we doing this in App Rules? Is it not possible in Network Rules or BPF?

Or is it because meta extraction happens after Network Rules?

Please help me understand.

Thanks

Dipin

2016-04-25 05:14 PM

Data is processed in the following order:

BPF->Network Rules->Parsers->Feeds->Application Rules

If you drop the traffic at BPF or Network Rules, no parsers, feeds, or app rules will be evaluated against that data, so no meta is extracted from it. You can't drop at Parsers or Feeds. Because truncating requires rule processing to be stopped, making the truncate rule the last app rule ensures that all other app rules are evaluated before the payload is dropped.
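The stage order above can be captured in a few lines to see which stages still get to inspect traffic for a given drop point. This is a toy model of the ordering described in this reply only; `stages_evaluated` is a hypothetical function name.

```python
from typing import List, Optional

# Stage order as given in the reply above.
STAGES = ["BPF", "Network Rules", "Parsers", "Feeds", "Application Rules"]
# Per the reply, traffic cannot be dropped at Parsers or Feeds.
DROP_POINTS = {"BPF", "Network Rules", "Application Rules"}


def stages_evaluated(drop_at: Optional[str] = None) -> List[str]:
    """Return the stages that see the traffic when it is dropped at `drop_at`."""
    if drop_at is None:
        return list(STAGES)
    if drop_at not in DROP_POINTS:
        raise ValueError(f"cannot drop at {drop_at!r}")
    # Everything up to and including the drop point runs; later stages do not.
    return STAGES[:STAGES.index(drop_at) + 1]


if __name__ == "__main__":
    for point in ("BPF", "Network Rules", "Application Rules"):
        print(point, "->", stages_evaluated(point))
```

Only a drop at the Application Rules stage leaves Parsers and Feeds in the evaluated list, which is why the truncation is done there rather than in a network rule or BPF filter.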

May I inquire why you are dropping all the payload? What is your use case?