Writing Parsers - Part 2: What does a parser see?

2012-10-18 10:00 AM

This is the second in a series on writing your own parsers. The previous installments can be found at:

Part 2: What does a parser see?

When a "session" is run through a parser, what exactly is it that is being run through the parser?

Your intuition probably leads you to imagine that it is the exact same stream of bytes that you see on the wire, in the exact same order.

But that's not quite true. A parser isn't going to see the exact same bytes that are on the wire, nor in the same order.

To understand why, imagine an HTTP request captured while crossing a network segment with an MTU of 68. What does that look like frame by frame?

Frame 1:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 32 bytes (SYN)

Frame 2:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 32 bytes (SYNACK)

Frame 3:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes (ACK)

Ethernet footer 6 bytes

Frame 4:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes

GET /hello/wo 14 bytes

Frame 5:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes (ACK)

Ethernet footer 6 bytes

Frame 6:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes

rld?foo=bar H 14 bytes

Frame 7:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes (ACK)

Ethernet footer 6 bytes

Frame 8:

Ethernet header 14 bytes

IP header 20 bytes

TCP header 20 bytes

TTP/1.1CLHOST 14 bytes (C = Carriage Return 0x0D, L = Line Feed 0x0A)

And so on...

If you think an example with an MTU of 68 is silly, consider a full set of mail headers and a standard MTU of 1500. Do they fit into a single frame? Usually not.

How do you extract the filename from that? Half of it is in frame 4, half is in frame 6, with several bytes of headers, footers, and an ACK in between.
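To make the problem concrete, here is a minimal Python sketch (not NetWitness parser code) that looks for the request target in each data-carrying payload on its own, and then in the payloads joined together. The payload bytes are copied from the example above, with the C and L placeholders written as `\r` and `\n`. The `REQUEST_LINE` pattern and the `find_target` helper are hypothetical names made up for this illustration.

```python
import re

# Payloads of the three data-carrying frames in the example above.
frame_payloads = [
    b"GET /hello/wo",
    b"rld?foo=bar H",
    b"TTP/1.1\r\nHOST",
]

# Hypothetical pattern for an HTTP/1.1 GET request line (illustration only).
REQUEST_LINE = re.compile(rb"GET (\S+) HTTP/1\.1\r\n")


def find_target(data):
    """Return the request target if a complete request line is present."""
    match = REQUEST_LINE.search(data)
    return match.group(1) if match else None


# Looking at one frame at a time never finds a complete request line.
per_frame = [find_target(payload) for payload in frame_payloads]
print(per_frame)  # [None, None, None]

# Joining the payloads first makes the same pattern succeed.
joined = find_target(b"".join(frame_payloads))
print(joined)  # b'/hello/world?foo=bar'
```

The pattern itself is unchanged between the two attempts. The only difference is whether the bytes are contiguous when it runs.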

Instead, sessions are processed before they are sent through the parsers. During this process, known as "sessionization" or "session reconstruction":

(0) Network meta is registered - such as IP source and destination, port numbers, etc.

(1) Each frame/packet is stripped of all headers and footers, leaving only the data portion (payload)

(2) All the payloads of the packets sent from the client to the server are concatenated (known as the "request stream")

(3) All the payloads of the packets sent from the server to the client are concatenated (known as the "response stream")

(4) The request stream and response stream are concatenated, request stream then response stream.

The final result of step 4 is the "session" - this is what is presented to the parsers.
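Steps 1 through 4 can be sketched in a few lines of Python. This is only a rough model under some loud assumptions: the packets are already in order, there are no retransmissions or gaps, and each packet has already had its headers and footers stripped. Step 0 (registering network meta) is left out. The `Packet` class, the `sessionize` function, and the response bytes are invented for the illustration and are not part of any NetWitness API.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    from_client: bool  # True for client-to-server, False for server-to-client
    payload: bytes     # data portion only; headers and footers already removed


def sessionize(packets):
    """Concatenate the request payloads, then the response payloads."""
    request_stream = b"".join(p.payload for p in packets if p.from_client)
    response_stream = b"".join(p.payload for p in packets if not p.from_client)
    return request_stream + response_stream


# Wire order: data from the client interleaved with ACKs from the server.
# A bare ACK carries no payload, so it contributes nothing to either stream.
packets = [
    Packet(True, b"GET /hello/wo"),
    Packet(False, b""),
    Packet(True, b"rld?foo=bar H"),
    Packet(False, b""),
    Packet(True, b"TTP/1.1\r\n\r\n"),
    Packet(False, b"HTTP/1.1 200 OK\r\n\r\n"),  # made-up response
]

session = sessionize(packets)
print(session)
# b'GET /hello/world?foo=bar HTTP/1.1\r\n\r\nHTTP/1.1 200 OK\r\n\r\n'
```

Note that the empty ACK payloads simply vanish, which is why a parser never has to skip over them.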

So while the actual order of a network session is:

request packet

response packet

request packet

response packet

request packet

response packet

What a parser "sees" is actually:

request payload

request payload

request payload

response payload

response payload

response payload

As a practical example, consider an HTTP session consisting of a request for an html page and its images:

GET /pageone.html HTTP/1.0

HTTP/1.0 200 OK

<html> ...

GET /image1.jpg HTTP/1.0

HTTP/1.0 200 OK

...

GET /image2.jpg HTTP/1.0

HTTP/1.0 200 OK

...

What a parser will see is:

GET /pageone.html HTTP/1.0

GET /image1.jpg HTTP/1.0

GET /image2.jpg HTTP/1.0

HTTP/1.0 200 OK

<html> ...

HTTP/1.0 200 OK

...

HTTP/1.0 200 OK

...

This may seem counter-intuitive, and in some ways it does create challenges. But it still makes parser writing easier than if you had to be concerned with packet boundaries, bouncing between streams, skipping ACKs, etc.
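One challenge this ordering plausibly creates is working out which response belongs to which request, since they are no longer adjacent. The sketch below is plain Python, not parser code. It builds the session from the example above as a parser would see it and pairs requests with responses by position. It assumes responses come back in the same order as the requests, and that no body happens to contain bytes that look like a request or status line. The `REQUEST` and `STATUS` patterns and the exact line endings are assumptions for the illustration.

```python
import re

# The example session in the order a parser sees it:
# all three requests first, then all three responses.
session = (
    b"GET /pageone.html HTTP/1.0\r\n\r\n"
    b"GET /image1.jpg HTTP/1.0\r\n\r\n"
    b"GET /image2.jpg HTTP/1.0\r\n\r\n"
    b"HTTP/1.0 200 OK\r\n\r\n<html> ..."
    b"HTTP/1.0 200 OK\r\n\r\n..."
    b"HTTP/1.0 200 OK\r\n\r\n..."
)

# Hypothetical patterns for request lines and status lines.
REQUEST = re.compile(rb"GET (\S+) HTTP/1\.0\r\n")
STATUS = re.compile(rb"HTTP/1\.0 (\d{3}) ")

targets = REQUEST.findall(session)
statuses = STATUS.findall(session)

# Pair the Nth request with the Nth response.
pairs = list(zip(targets, statuses))
for target, status in pairs:
    print(target.decode(), status.decode())
```

Because each stream is contiguous, both patterns can run over the whole session without any knowledge of where the packet boundaries were.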

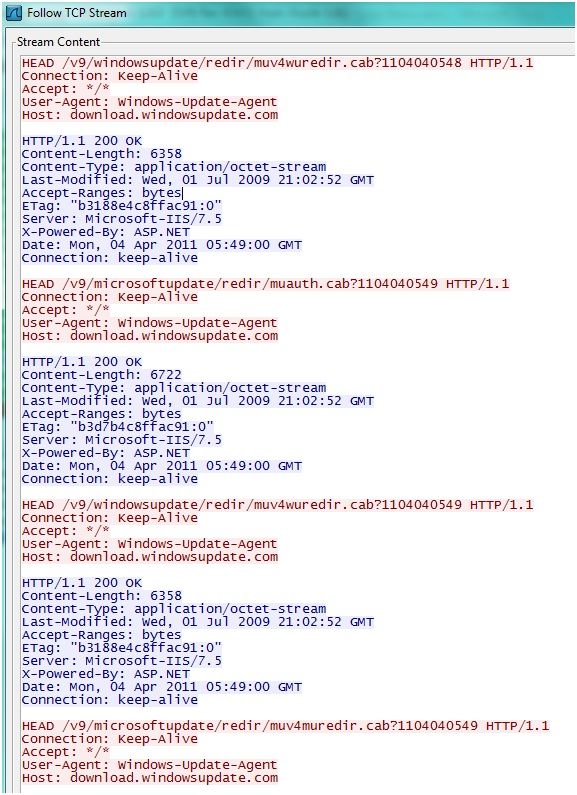

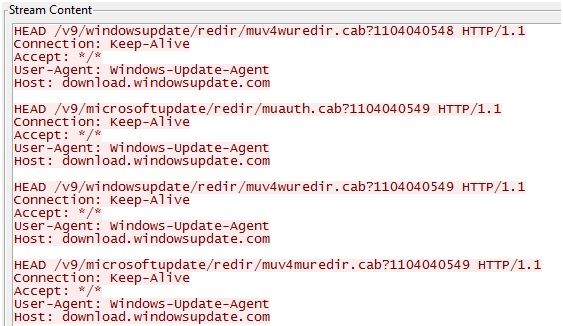

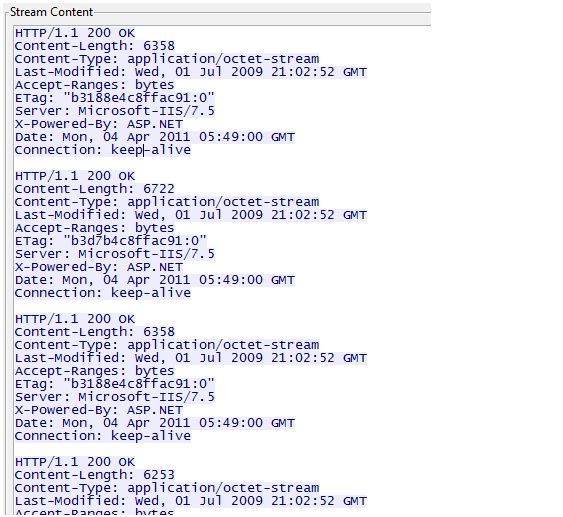

If you're having trouble imagining this, here's some illustrations. If you've ever used the "Follow TCP Stream" capability of Wireshark, you've already seen what this looks like.

(1) Each frame/packet is stripped of all headers and footers, leaving only the data portion (payload)

(2) All the payloads of the packets sent from the client to the server are concatenated (known as the "request stream")

REQUEST STREAM

(3) All the payloads of the packets sent from the server to the client are concatenated (known as the "response stream")

RESPONSE STREAM

(4) The request stream and response stream are concatenated, request stream then response stream.

SESSION

2013-12-19 09:51 AM

Is there a part 3?

2013-12-19 04:16 PM

Not yet, and I apologize for that (considering part 2 was over a year ago). Hopefully I'll be able to get a part 3 up in January.