How to add new RSA Security Analytics Warehouse (SAW) node into existing SAW cluster

Article Number

000032552

Applies To

RSA Product Set: Security Analytics

RSA Product/Service Type: Security Analytics Warehouse

RSA Version/Condition: 10.3.x, 10.4.x, 10.5.x

Platform: CentOS

O/S Version: 5, 6

Issue

This article describes how to add a SAW node to an existing SAW cluster. There are two scenarios where this may be required:

- A new SAW node needs to be added to the SAW cluster.

- An existing SAW node has been replaced (RMA'd), and the replacement node needs to be added to the SAW cluster.

Task

Checklist for the node being added:

1. Verify the IP address and subnet of the new SAW node. The new node must be able to reach every existing SAW node; confirm this by pinging each existing node from the new node.

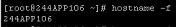

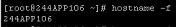

2. Make sure “hostname -f” returns the fully qualified domain name (FQDN) by configuring the host name in the following files:

/etc/sysconfig/network

/etc/hosts

After this configuration, “hostname -f” on the new node should return the FQDN.

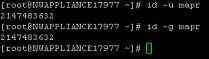

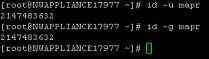

3. UID,GID of “mapr” should be 2147483632. Please use “id –u mapr” for UID and “id –g mapr” for GID of new appliance.

Image description

Image description

4. Verify the mounts “/opt” and “tmp” has defaults ON in /etc/fstab file.

Locate the mount point entries for /opt and /tmp in /etc/fstab. The following is the example of the entries for /opt and /tmp:

5. Cleanup old data from the image.

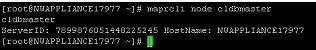

1. List of CLDB Nodes in SAW cluster:

Image description

Image description

Image description

Image description

2. List of ZOOKEEPER Nodes in SAW cluster:

Image description

Image description

3. Cluster name already found in step 1.

4. List of disks to be added in the node: use “lsblk” command for unmounted/unformatted disks for cluster configuration.

Image description

Image description

6. Prepare a file “/root/mydisks” in new SAW node with list of disks to be added in HDFS with one disk per line as below.

1. Verify IP address and Subnet of the new SAW node. The new SAW node has to be reached to all existing SAW nodes when they are pinged from new SAW node.

2. Make sure “hostname –f” returns Fully Qualified domain name. By configuring host name in below files.

/etc/sysconfig/network

Image description

Image description/etc/hosts

Image description

Image descriptionPost above configuration, “hostname –f” output will be as below in new node.

Image description

3. The UID and GID of the “mapr” user must both be 2147483632. On the new appliance, use “id -u mapr” to check the UID and “id -g mapr” to check the GID.

If the new node has a different UID or GID, use the commands below to correct it.

usermod -u <NEWUID> <LOGIN>

groupmod -g <NEWGID> <GROUP>

find / -user <OLDUID> -exec chown -h <NEWUID> {} \;

find / -group <OLDGID> -exec chgrp -h <NEWGID> {} \;

usermod -g <NEWGID> <LOGIN>

In these commands, substitute <NEWUID>=2147483632, <NEWGID>=2147483632, <LOGIN>=mapr, <GROUP>=the primary group of the mapr user, <OLDUID>=501 and <OLDGID>=501 (a new ISO image may have the value 501 for both).

4. Verify that the “/opt” and “/tmp” mounts do not have nosuid set in the /etc/fstab file.

Locate the mount point entries for /opt and /tmp in /etc/fstab. The following is an example of these entries:

/dev/mapper/VolGroup01-optlv /opt ext4 nosuid 1 2

/dev/mapper/VolGroup01-tmplv /tmp ext4 nosuid 1 2

Change nosuid to suid in the /etc/fstab file, as shown below.

/dev/mapper/VolGroup01-optlv /opt ext4 suid 1 2

/dev/mapper/VolGroup01-tmplv /tmp ext4 suid 1 2

5. Clean up old data from the image.

- Remove the ZooKeeper data using rm -rf /opt/mapr/zkdata/*

- Remove the host ID using rm -f /opt/mapr/hostid
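Checklist items 2 to 4 can be spot-checked with a few small shell functions before the node is joined to the cluster. The following is a minimal sketch under stated assumptions, not an RSA-supplied tool: the function names (is_fqdn, ids_ok, nosuid_mounts) are illustrative, and the FQDN test only checks that the name contains a dot and no empty labels.

```shell
# Illustrative pre-flight helpers for a new SAW node (hypothetical names).

EXPECTED_ID=2147483632   # required UID and GID of the mapr user (checklist item 3)

# Succeeds only if the given name looks fully qualified: it contains a dot
# and has no leading, trailing, or doubled dots.
is_fqdn() {
    case "$1" in
        ""|.*|*.|*..*) return 1 ;;
        *.*) return 0 ;;
        *) return 1 ;;
    esac
}

# Succeeds only if both the UID ($1) and the GID ($2) equal EXPECTED_ID.
ids_ok() {
    [ "$1" = "$EXPECTED_ID" ] && [ "$2" = "$EXPECTED_ID" ]
}

# Prints /opt and/or /tmp if their option field in the given fstab file
# still contains nosuid. Prints nothing when neither entry has nosuid.
nosuid_mounts() {
    awk '$1 !~ /^#/ && ($2 == "/opt" || $2 == "/tmp") {
        n = split($4, opts, ",")
        for (i = 1; i <= n; i++)
            if (opts[i] == "nosuid") { print $2; break }
    }' "$1"
}

# Possible use on the new node:
#   is_fqdn "$(hostname -f)" || echo "hostname -f is not an FQDN"
#   ids_ok "$(id -u mapr)" "$(id -g mapr)" || echo "mapr UID/GID need fixing"
#   nosuid_mounts /etc/fstab
```

The functions only read their inputs; they do not modify /etc/fstab or any user account, so any correction is still made by hand as described in the checklist.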

Information needed to add the new node to the SAW cluster:

1. List of CLDB nodes in the SAW cluster:

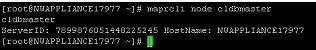

- Log in over SSH to an existing SAW node and run “maprcli node cldbmaster” to find the CLDB master node.

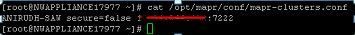

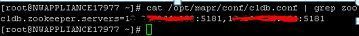

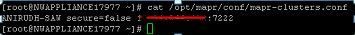

- Log in to the CLDB master node and run “cat /opt/mapr/conf/mapr-clusters.conf” to get the cluster details.

- The output has the form <Cluster_Name> secure=false <cldb_node1>:7222, which gives the cluster name and the CLDB nodes.

2. List of ZooKeeper nodes in the SAW cluster:

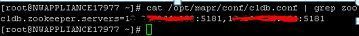

- Log in to the CLDB master node and run “cat /opt/mapr/conf/cldb.conf | grep zoo” to get the ZooKeeper node list.

3. Cluster name: already found in step 1.

4. List of disks to be added on the node: use the “lsblk” command to find unmounted, unformatted disks for the cluster configuration.

- For example, if the output shows that sda has partitions and sdb does not, sdb is available to add to the cluster.

5. RPM packages that need to be installed:

mapr-fileserver

mapr-nfsserver

mapr-tasktracker

mapr-jobtracker (optional)

mapr-cldb (only if this new node is a CLDB node)

6. Prepare a file “/root/mydisks” on the new SAW node listing the disks to be added to HDFS, one disk per line, as below.

/dev/sdb

/dev/sdc

/dev/sdd

..

/dev/sdy
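The values gathered in steps 1 to 6 can be extracted with a few small shell helpers. The following is a sketch under stated assumptions: the function names are illustrative, the mapr-clusters.conf line is assumed to have the form described in step 1, and the line returned by the grep in step 2 is assumed to be a key=value pair whose value is the ZooKeeper node list. Check both against the actual output on the cluster.

```shell
# Illustrative helpers for collecting cluster details (hypothetical names).

# Prints the cluster name: the first field of a mapr-clusters.conf line.
cluster_name() {
    printf '%s\n' "$1" | awk '{ print $1 }'
}

# Prints the CLDB nodes from a mapr-clusters.conf line as a comma-separated
# list. Fields containing "=" (such as secure=false) are skipped.
cldb_nodes() {
    printf '%s\n' "$1" | awk '{
        out = ""
        for (i = 2; i <= NF; i++)
            if ($i !~ /=/) out = out (out == "" ? "" : ",") $i
        print out
    }'
}

# Prints everything after the first "=" of a key=value line.
# Assumption: the grep on cldb.conf returns one such line.
zk_nodes() {
    printf '%s\n' "${1#*=}"
}

# Prints one /dev path per line for the disk names given as arguments.
disk_list() {
    printf '/dev/%s\n' "$@"
}

# Possible use:
#   line=$(head -n 1 /opt/mapr/conf/mapr-clusters.conf)
#   cluster_name "$line"; cldb_nodes "$line"
#   zk_nodes "$(grep zoo /opt/mapr/conf/cldb.conf)"
#   disk_list sdb sdc sdd > /root/mydisks
```

The disk names passed to disk_list are still chosen by hand from the “lsblk” output; the helper only formats them, one per line, in the layout that /root/mydisks expects.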

Resolution

Follow the steps below to add the new node to the SAW cluster.

1. Run the command below on the new SAW node.

/opt/mapr/server/configure.sh -c <CLDB_NODES> -z <ZOOKEEPER_NODES> -N <CLUSTER_NAME> -F /root/mydisks --create-user

2. Verify that the new node is configured properly using “hadoop fs -ls /”.
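Because the command in step 1 takes several values gathered earlier, it can help to assemble it and review it before running it. The following is a sketch only: configure_cmd is a hypothetical helper, it prints the command rather than executing it, and its flags are copied as they appear in the command in step 1, so they should be confirmed against the configure.sh documentation for the installed MapR version.

```shell
# Prints the configure.sh command line for review; it does not run it.
# Arguments: CLDB node list, ZooKeeper node list, cluster name, disk-list file.
configure_cmd() {
    if [ "$#" -ne 4 ]; then
        echo "usage: configure_cmd CLDB_NODES ZOOKEEPER_NODES CLUSTER_NAME DISK_FILE" >&2
        return 2
    fi
    for value in "$@"; do
        if [ -z "$value" ]; then
            echo "error: empty argument" >&2
            return 1
        fi
    done
    # The disk list must exist and contain at least one entry.
    if [ ! -s "$4" ]; then
        echo "error: disk list $4 is missing or empty" >&2
        return 1
    fi
    printf '%s -c %s -z %s -N %s -F %s --create-user\n' \
        /opt/mapr/server/configure.sh "$1" "$2" "$3" "$4"
}

# Possible use (host names and cluster name are placeholders):
#   configure_cmd node1:7222 zk1:5181 my.cluster /root/mydisks
```

Printing the command first gives a chance to catch an empty node list or a missing /root/mydisks file before the node is changed.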


© 2022 RSA Security LLC or its affiliates. All rights reserved.