- NetWitness Community

Exporting and Re-Injecting Logs and Maintaining Original Date/Time


2018-09-12 12:34 PM

There are times when you would like to export data from a Log Decoder and then re-inject it into a new Log Decoder.

Typically you would do this through the Investigator interface: save the file and then upload it into the new Log Decoder. The problem with this is that the original dates and times are lost, because a Log Decoder stamps the time at the point of ingest or import.

A change was made to the Log Decoder code for other reasons, and we can leverage it for this use case.

Collection timestamping has always been done at the Decoder; however, a change was made to allow the VLC (Virtual Log Collector) to timestamp the logs instead. We are going to use that capability here.

The process is fairly simple and is as follows:

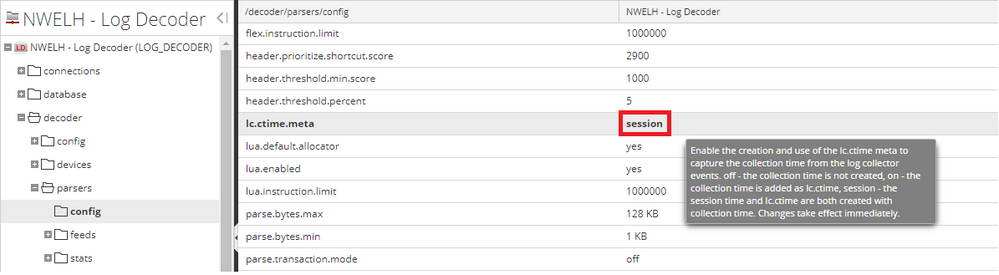

Enable VLC timestamping in the Log Decoder:

Log Decoder -> Explore -> decoder -> parsers -> config: change lc.lctime.meta to "session"

This tells the decoder to use the VLC timestamp as the authoritative timestamp.

The next task is to export the data from the Log Decoder that currently holds it.

The only filters available are a start time and an end time; you cannot filter on meta values.

For example, exporting all logs from today between 2 and 3 PM is no problem, but exporting all logs from today between 2 and 3 PM where the device type is Palo Alto is NOT POSSIBLE.

The process has two parts:

First, we need to extract the logs and transform the syslog header data.

Second, we need to inject the data. This is done using Syslog-NG, Rsyslog, NXLog or NWLogplayer.

The following sample raw log illustrates the process:

Sep 10 10:14:49 XTM_2_Series (2018-09-13T14:14:49) firewall: msg_id="3000-0148" Allow 1-Trusted 0-External 68 udp 20 63 192.168.30.211 8.8.8.8 44505 53 (dhcp machines-00)

After extraction and transformation with the script, the log looks like this:

[][][192.168.30.2][1536762299000000][] Sep 10 10:14:49 XTM_2_Series (2018-09-13T14:14:49) firewall: msg_id="3000-0148" Allow 1-Trusted 0-External 68 udp 20 63 192.168.30.211 8.8.8.8 44505 53 (dhcp machines-00)

The log message being sent CANNOT have a syslog priority field (such as <10>) preceding it; if it does, the message is treated differently and the bracketed header is ignored.

For example, the following log would be processed as normal: the original date and time would be lost, and the bracketed header would become part of the log message and would not parse.

<10> [][][192.168.30.2][1536762299000000][] Sep 10 10:14:49 XTM_2_Series (2018-09-13T14:14:49) firewall: msg_id="3000-0148" Allow 1-Trusted 0-External 68 udp 20 63 192.168.30.211 8.8.8.8 44505 53 (dhcp machines-00)
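Because a leading priority field silently disables the header, it can be worth checking the transformed file for one before streaming it. The following is a minimal Python sketch, not part of the original script.py; has_priority and lines_with_priority are hypothetical names, and it assumes the priority field is the standard syslog "<digits>" prefix at the very start of the line, as in the <10> example above.

```python
import re

# Hypothetical pre-flight check, not part of the original script.py.
# A syslog priority (PRI) field is "<", one to three digits, ">" at the very
# start of the line, optionally followed by whitespace (as in "<10> [][]...").
PRI_PREFIX = re.compile(r"<\d{1,3}>\s*")


def has_priority(line: str) -> bool:
    """Return True if the line begins with a syslog priority field such as <10>."""
    # re.match only matches at the start of the string.
    return PRI_PREFIX.match(line) is not None


def lines_with_priority(lines):
    """Return the 1-based numbers of lines that begin with a priority field."""
    return [n for n, line in enumerate(lines, start=1) if has_priority(line)]


if __name__ == "__main__":
    sample = [
        "[][][192.168.30.2][1536762299000000][] Sep 10 10:14:49 XTM_2_Series firewall: ...",
        "<10> [][][192.168.30.2][1536762299000000][] Sep 10 10:14:49 XTM_2_Series firewall: ...",
    ]
    print(lines_with_priority(sample))  # [2]
```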

A breakdown of the header is as follows:

- First [] and second []: blank
- Third []: the IP address or hostname of the original sending system (192.168.30.2 in the example)
- Fourth []: the originally collected timestamp as Unix epoch time in microseconds (1536762299000000 in the example)
- Fifth []: blank
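The header can be built mechanically from the source address and the original collection time. This is a minimal Python sketch, assuming the collection time is available as a timezone-aware datetime; epoch_micros and build_replay_line are hypothetical helper names, not functions from script.py. Integer arithmetic is used so the microsecond value is exact rather than rounded through a float.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def epoch_micros(ts: datetime) -> int:
    """Unix epoch time in microseconds, the format expected in the fourth []."""
    if ts.tzinfo is None:
        # A naive datetime is ambiguous (local time? UTC?), so refuse it.
        raise ValueError("timestamp must be timezone-aware")
    delta = ts - EPOCH
    # timedelta is normalized into days, seconds and microseconds.
    return (delta.days * 86_400 + delta.seconds) * 1_000_000 + delta.microseconds


def build_replay_line(source: str, collected: datetime, raw: str) -> str:
    """Prepend [][][source][epoch microseconds][] and a space to the raw log."""
    return f"[][][{source}][{epoch_micros(collected)}][] {raw}"


if __name__ == "__main__":
    raw = 'Sep 10 10:14:49 XTM_2_Series (2018-09-13T14:14:49) firewall: msg_id="3000-0148" Allow'
    collected = datetime(2018, 9, 12, 14, 24, 59, tzinfo=timezone.utc)
    print(build_replay_line("192.168.30.2", collected, raw))
```

With the source 192.168.30.2 and a collection time of 2018-09-12 14:24:59 UTC, this reproduces the [][][192.168.30.2][1536762299000000][] header from the example.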

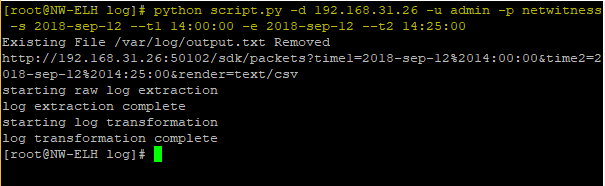

On any Linux box (or the Log Decoder itself), copy the script.py file to the /var/log directory. Technically you could run the script from anywhere, but for simplicity we copy it to /var/log.

Then run the script as follows:

python script.py -d 192.168.1.112 -u admin -p netwitness -s 2018-Apr-19 --t1 11:00:00 -e 2018-Apr-30 --t2 11:00:00

-d is the Log Decoder you want to pull the data from

-u and -p are the username and password used to connect to that Log Decoder

-s is the start date of the logs you want to extract

--t1 is the start time of the logs you want to extract

-e is the end date of the logs you want to extract

--t2 is the end time of the logs you want to extract
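For reference, the documented flags map naturally onto a command-line parser. The sketch below is a hypothetical Python reconstruction, not the actual script.py: it only shows how -s/--t1 and -e/--t2 combine into a start and end time, and it assumes the dates use English month abbreviations (2018-Apr-19) and that the times carry no time zone.

```python
import argparse
from datetime import datetime

# Assumed date/time layout, based on "-s 2018-Apr-19 --t1 11:00:00".
# %b expects English month abbreviations (Python's default C locale).
DATE_TIME_FORMAT = "%Y-%b-%d %H:%M:%S"


def parse_args(argv=None):
    """Hypothetical parser mirroring the flags documented for script.py."""
    p = argparse.ArgumentParser(description="Export logs from a Log Decoder (sketch)")
    p.add_argument("-d", required=True, help="Log Decoder to pull the data from")
    p.add_argument("-u", required=True, help="username")
    p.add_argument("-p", required=True, help="password")
    p.add_argument("-s", required=True, help="start date, e.g. 2018-Apr-19")
    p.add_argument("--t1", required=True, help="start time, e.g. 11:00:00")
    p.add_argument("-e", required=True, help="end date, e.g. 2018-Apr-30")
    p.add_argument("--t2", required=True, help="end time, e.g. 11:00:00")
    return p.parse_args(argv)


def time_window(args):
    """Combine the date and time flags into naive (start, end) datetimes."""
    start = datetime.strptime(f"{args.s} {args.t1}", DATE_TIME_FORMAT)
    end = datetime.strptime(f"{args.e} {args.t2}", DATE_TIME_FORMAT)
    if end <= start:
        raise ValueError("end must be after start")
    return start, end


if __name__ == "__main__":
    args = parse_args(["-d", "192.168.1.112", "-u", "admin", "-p", "netwitness",
                       "-s", "2018-Apr-19", "--t1", "11:00:00",
                       "-e", "2018-Apr-30", "--t2", "11:00:00"])
    print(time_window(args))
```

Start and end time are the only filters, which matches the limitation described earlier: there is no flag for meta values.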

This will generate two files:

Extract.txt -- the raw log export

Output.txt -- the raw logs with the modified header prepended

Extract.txt can be deleted, as it is not used further.

output.txt is the file that needs to be read and streamed over syslog to the Log Decoder on which lc.lctime.meta was changed to "session".

You can use Syslog-NG, Rsyslog, NXLog or NWLogplayer to stream the data in. However, it is MANDATORY that the contents of the lines are not modified in any way.
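Since the lines must reach the Log Decoder exactly as written, a quick sanity check on output.txt before streaming can catch damaged lines early. A minimal Python sketch, assuming every line should start with the five-bracket header followed by a space, with a 16-digit microsecond timestamp (which covers dates from 2001 to 2286); invalid_lines and check_file are hypothetical names.

```python
import re

# Hypothetical check, not part of script.py. Expected header:
# [] [] [host or IP] [16-digit Unix epoch in microseconds] [] then a space.
HEADER = re.compile(r"\[\]\[\]\[[^\]\s]+\]\[\d{16}\]\[\] ")


def invalid_lines(lines):
    """Return the 1-based numbers of lines that do not start with the replay header."""
    bad = []
    for number, line in enumerate(lines, start=1):
        # Ignore the line ending; a priority field or blank line fails the match.
        if not HEADER.match(line.rstrip("\r\n")):
            bad.append(number)
    return bad


def check_file(path="output.txt"):
    """Read a transformed export and report lines without a valid header."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        return invalid_lines(fh)
```

For example, check_file("/var/log/output.txt") returns an empty list when every line carries a well-formed header.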

Note that you CANNOT use the Rsyslog server on any of the NetWitness components; it must be run on a standalone system.

The Rsyslog configuration file below shows the template being used:

____________________________

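## Load the file input module used by the $InputFile* directives (skip if rsyslog.conf already loads it)

$ModLoad imfile

## This section monitors the log file /var/log/output.txt

$InputFileName /var/log/output.txt

$InputFileTag MYAPP

$InputFileStateFile stat-MYAPP

$InputFileSeverity debug

$InputFileFacility local0

$InputFilePersistStateInterval 20000

$InputRunFileMonitor

## Send each line exactly as read (%rawmsg%), with no syslog header, over TCP (@@)

$template NWReplay,"%rawmsg%\n"

if $programname == 'MYAPP' then @@192.168.31.26:514;NWReplay

## Discard the message after forwarding it (newer rsyslog versions use "& stop")

& ~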

____________________________

This configuration file directs Rsyslog to read the file and stream its contents to the Log Decoder (NWLD) over a TCP syslog connection.
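What Rsyslog does here, reading the file and sending each line unchanged over a TCP connection, can be illustrated in a few lines of Python. This is a hypothetical sketch to show the mechanism, not one of the supported tools listed above: it assumes newline-delimited TCP framing (matching the "%rawmsg%\n" template), adds no priority field or syslog header, and only normalizes line endings.

```python
import socket


def stream_file(path, host, port=514, timeout=10.0):
    """Send each non-blank line of `path` over one TCP connection, LF-terminated.

    The line content is sent byte-for-byte; only the trailing CR/LF is
    replaced by a single LF. Returns the number of lines sent.
    """
    sent = 0
    with open(path, "rb") as fh, socket.create_connection((host, port), timeout=timeout) as sock:
        for line in fh:
            body = line.rstrip(b"\r\n")
            if not body:
                continue  # skip blank lines rather than sending empty events
            sock.sendall(body + b"\n")
            sent += 1
    return sent
```

For example, stream_file("/var/log/output.txt", "192.168.31.26") would send the file to the Log Decoder used in the configuration above.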

To do the same with NXLog on Windows (assuming the file is in the C:\logs directory), define the configuration file similarly:

_______________________

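<Input in>

Module im_file

File 'C:\logs\output.txt'

ReadFromLast False

SavePos False

PollInterval 1

Exec $Message = $raw_event; $SyslogFacilityValue = 22;

</Input>

<Output out1>

Module om_tcp

# Log Decoder address (the same one used in the Rsyslog example)

Host 192.168.31.26

Port 514

</Output>

<Route r1>

Path in => out1

</Route>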

_______________________

NWLogplayer can also be used, with the "-r 1" switch.

Once the data has been read and injected, it will be available in Investigator with the original dates and times.

Hope that helps

Dave


2019-01-31 02:55 AM

Hi Dave,

I am thinking of following this approach when migrating SAW nodes to Archiver in an 11.x deployment.

Do you think there is a way to export Avro data to raw logs while preserving the timestamps, in order to inject them again into SAW nodes?

What do you suggest for preserving the Avro data and their timestamps?

Thanks a lot.

Akram