Interesting DNS Tunneling Content

2017-05-03 05:08 PM

The Domain Name System (DNS) allows machines to convert human-readable domain names like google.com into IP addresses.

In this post, I talk about how DNS tunneling works and present some content that I put together to aid analysts in detecting DNS tunneling in their environment.

DNS TUNNELING

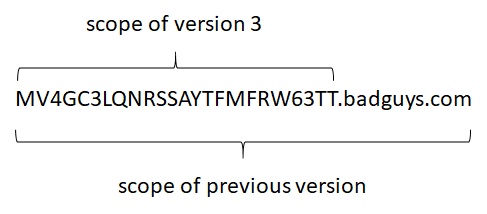

An attacker can abuse DNS to send data between infected machines in the network and their Command and Control (C2) server. The process begins when the attacker is authoritative for a domain. Let's say they own badguys.com. To send data from the infected machine, they simply encode the data into the subdomains. For example, their hello message could be: hello.badguys.com. Of course, sending OS-WinXP-User-Steve-Perm-Admin.badguys.com is going to raise suspicion, and it is also an inefficient way to send data. So, attackers may hex or base32 encode the data. Then the subdomain looks something like: MV4GC3LQNRSSAYTFMFRW63TT.badguys.com.
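To make the encoding step concrete, here is a minimal Python sketch of how arbitrary bytes can be packed into subdomain labels with base32. The function names and the label-splitting approach are assumptions for illustration, not taken from any specific tunneling tool.

```python
import base64

MAX_LABEL = 63  # DNS limits each label to 63 octets


def encode_as_qname(data: bytes, root: str) -> str:
    """Base32-encode data and place it in labels in front of the root domain."""
    encoded = base64.b32encode(data).decode("ascii").rstrip("=")
    # Split long payloads so that no single label exceeds the DNS limit.
    labels = [encoded[i:i + MAX_LABEL] for i in range(0, len(encoded), MAX_LABEL)]
    return ".".join(labels + [root])


def decode_qname(qname: str, root: str) -> bytes:
    """Reverse of encode_as_qname, as the C2 side would do."""
    encoded = qname[: -len(root) - 1].replace(".", "")
    padding = "=" * (-len(encoded) % 8)  # restore the stripped base32 padding
    return base64.b32decode(encoded + padding)


print(encode_as_qname(b"example beacons", "badguys.com"))
# MV4GC3LQNRSSAYTFMFRW63TT.badguys.com
```

The subdomain in the post decodes to the text "example beacons", which is why it is used as the sample payload here.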

To identify this type of DNS tunnel, the analyst looks for a large number of random-looking subdomains under the same root domain while the C2 is actively communicating. When the infected machine is simply beaconing, the analyst expects a single subdomain (or a few) requested frequently from the same infected machine.
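The heuristic above can be sketched in a few lines of Python. This is only an illustration: the query list is made up, and the root domain is taken naively as the last two labels, which is an assumption that breaks for names like example.co.uk.

```python
from collections import defaultdict


def summarize(queries):
    """queries: iterable of (source_ip, qname) pairs. Returns per-root counts."""
    subdomains = defaultdict(set)
    sources = defaultdict(set)
    counts = defaultdict(int)
    for src, qname in queries:
        labels = qname.lower().rstrip(".").split(".")
        root = ".".join(labels[-2:])   # naive root: last two labels
        sub = ".".join(labels[:-2])
        subdomains[root].add(sub)
        sources[root].add(src)
        counts[root] += 1
    return {
        root: {
            "queries": counts[root],
            "unique_subdomains": len(subdomains[root]),
            "sources": len(sources[root]),
        }
        for root in counts
    }


# Made-up sample data: one host tunneling, three hosts browsing normally.
queries = [
    ("10.0.0.5", "MV4GC3LQNRSSAYTFMFRW63TT.badguys.com"),
    ("10.0.0.5", "ORUGS4ZANFZSAYJAORSXG5A.badguys.com"),
    ("10.0.0.5", "NBSWY3DPEB3W64TMMQ.badguys.com"),
    ("10.0.0.7", "www.google.com"),
    ("10.0.0.8", "www.google.com"),
    ("10.0.0.9", "mail.google.com"),
]

for root, stats in summarize(queries).items():
    print(root, stats)
```

An active tunnel tends to show every query as a new subdomain from a single source, while a beacon shows many queries for very few subdomains.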

CONTENT DESCRIPTION

To aid the analyst in detecting DNS tunneling, this content looks at the length of the query name field and computes its entropy. Most DNS names shouldn't be very long, and shouldn't be very random. Entropy is a mathematical way for us to express 'randomness'. A value of 8 (bits per byte, the maximum) would be perfectly random for this scenario.
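For readers who want to see the two measurements, here is a minimal Python sketch of Shannon entropy over the bytes of a name. It is an assumption that the parser uses this same byte-level, base-2 definition; the actual parser is written in Lua and is not reproduced here.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 for empty or single-symbol input)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


for name in (b"www", b"mail", b"MV4GC3LQNRSSAYTFMFRW63TT"):
    print(name.decode(), len(name), round(shannon_entropy(name), 3))
```

The value 8 is only reached when all 256 byte values are equally likely. Hostnames draw from a much smaller alphabet (letters, digits, hyphen), and a string of n characters cannot exceed log2(n) bits, so real values sit well below 8. What matters in the report is the relative gap between normal names and tunnel names.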

We use a parser to extract the alias.host length and entropy, and then compute the average in a NWDB report. The parser requires the addition of the following lines to the index-concentrator-custom.xml and index-decoder-custom.xml files as appropriate for the environment:

<key description="Hostname Length" format="UInt32" level="IndexValues" name="alias.host.len" valueMax="1000000" defaultAction="Auto"/>

<key description="Hostname Entropy" format="Float32" level="IndexValues" name="alias.host.ent" valueMax="1000000" defaultAction="Auto"/>

<key description="Root Domain" format="Text" level="IndexValues" name="alias.host.root" valueMax="100000" defaultAction="Auto"/>

KNOWN COMPLICATIONS

1. Security tools: security tools like AV will often use DNS tunneling to submit hashes to their servers and get additional data. This leads to a large number of random-looking subdomains and can be filtered with the whitelist provided as part of the report. This is technically an example of DNS tunneling; it is just tunneling by the good guys.

2. DNS servers: DNS servers make it look like a lot of queries are coming from a single host, but you cannot be sure that is the case, since they are effectively proxying the queries. We cannot whitelist those servers, and simply have to pivot to logs if something seems interesting.

3. Confusing calculations: let's pretend that the attacker sent a request for google.com to check network connectivity and a request for beacon.badguys.com in the same session. The parser will calculate a separate entropy and length for each alias.host. However, when the report runs, it loses that context and calculates the same average entropy for both based on that session. This means our averages will not be quite accurate. In a large environment though (think law of large numbers), there ought to be enough queries for google.com as part of other sessions to mitigate this issue. Using DNS logs instead of packet data fixes this issue.

4. Non-DNS traffic: the parser adds the additional meta whenever alias.host is populated, regardless of whether the protocol is DNS. This allows it to run against logs. It also allows me to use some of that data in HTTP reports in the future.
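The averaging problem in complication 3 can be shown with a toy Python model. The entropy numbers are invented, and the way the session-level average is computed here is an assumption about how the report loses the host-to-value pairing, not a description of the NWDB internals.

```python
from collections import defaultdict
from statistics import mean

# Invented sessions: each is a list of (alias.host, entropy) pairs.
sessions = [
    [("google.com", 2.0), ("beacon.badguys.com", 4.0)],  # mixed session
    [("google.com", 2.0)],
    [("google.com", 2.0)],
]


def paired_average(sessions):
    """Average entropy per host when each value stays tied to its own host."""
    per_host = defaultdict(list)
    for session in sessions:
        for host, entropy in session:
            per_host[host].append(entropy)
    return {host: mean(values) for host, values in per_host.items()}


def session_level_average(sessions):
    """Average when every host in a session is credited with all of its values."""
    per_host = defaultdict(list)
    for session in sessions:
        values = [entropy for _, entropy in session]
        for host, _ in session:
            per_host[host].extend(values)
    return {host: mean(values) for host, values in per_host.items()}


print(paired_average(sessions))         # google.com 2.0, beacon.badguys.com 4.0
print(session_level_average(sessions))  # google.com 2.5, beacon.badguys.com 3.0
```

In this model the two extra clean google.com sessions pull its average back toward the true value, which is the law-of-large-numbers effect described above. The beacon's average stays diluted until it appears in sessions of its own.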

FUTURE WORK

I welcome feedback on the rules and parser, especially from environments with DNS logs.

2017-05-08 06:57 PM

I updated the parser to support country-code top-level domains and moved the functions to local scope.
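As an illustration of why country-code domains need special handling, here is a small Python sketch of splitting a hostname into subdomain and root. The suffix set is a tiny hypothetical sample, and this is not the parser's actual logic; a complete solution would consult the full Public Suffix List.

```python
# Hypothetical, deliberately tiny sample of two-label public suffixes.
TWO_LABEL_SUFFIXES = {"co.uk", "com.au", "co.jp"}


def split_host(host: str):
    """Return (subdomain, root) for a hostname, e.g. ('www', 'google.com')."""
    labels = host.lower().rstrip(".").split(".")
    # Keep three labels for the root when the last two form a known suffix.
    keep = 3 if ".".join(labels[-2:]) in TWO_LABEL_SUFFIXES else 2
    return ".".join(labels[:-keep]), ".".join(labels[-keep:])


print(split_host("www.google.com"))     # ('www', 'google.com')
print(split_host("a.b.example.co.uk"))  # ('a.b', 'example.co.uk')
```

Without the suffix check, every name under co.uk would be grouped under the single root "co.uk", which would look like one domain with an enormous number of subdomains.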

2017-05-24 11:45 AM

After seeing this in some environments, I made an alteration. I had included the root domain in the entropy and length calculations, thinking that malicious root domains might be more random or longer, which would help separate valid DNS from tunnels in the report. I was 100% wrong. By focusing on the subdomains only, I got a larger separation between legitimate DNS and tunnels. The following image may help explain.

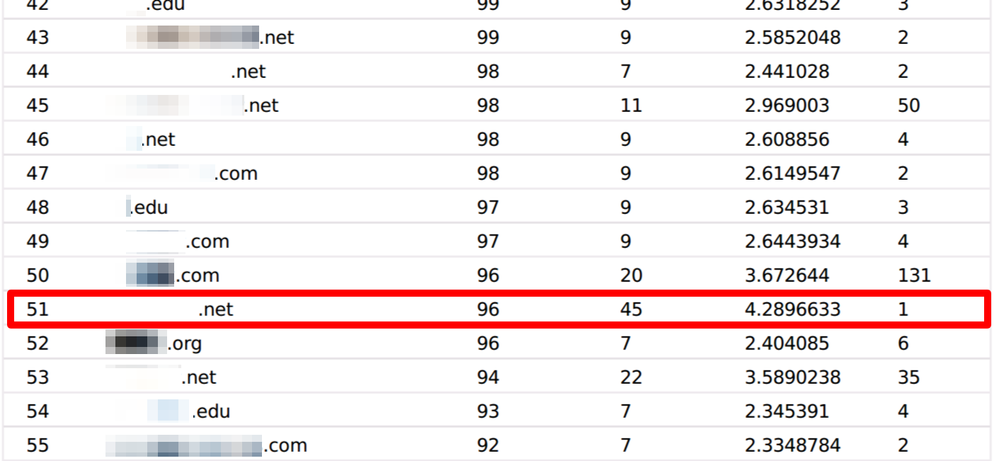

I am uploading a new version of the parser to assist in tunnel detection. Below you will find an actual example of a DNS tunnel.

The domain on row 51 is our tunnel. You can easily see the entropy is much higher than the other domains and the length much longer. It is also coming from a single machine. This makes discovering the tunnels really easy using the report.

Hopefully you find the updated parser valuable.

2017-06-05 11:38 AM

I updated the report format to add other meta of interest relevant for each domain.

2019-01-07 03:05 PM

I'm deploying this to my UAT and DR environments as I type this! I'm excited, thank you for doing this leg-work Matt.

I'll provide feedback if I have any or have questions.

2019-01-10 09:20 AM

I would add some sort of exception or separate workflow for IDN domains (Cyrillic, Arabic, Chinese, etc.). Basically, treat anything that starts with xn-- a bit differently, as this is the sign of IDNA encoding.

2019-01-15 04:38 AM

I have only DNS logs (Windows DNS), so I deployed the Lua parser on the Log Decoder and created the extra meta keys (alias.host.ent, alias.host, alias.host.len) in both index-concentrator-custom.xml and index-decoder-custom.xml.

Unfortunately, the extra meta keys do not get values. The domain names are parsed into the cp.info meta key in the following format, e.g.: (3)udc(3)msn(3)com(0); (3)www(6)google(3)com(0)

Can you help me figure out what the problem could be?

2019-01-15 02:42 PM

It is excellent that you have DNS logs. Using logs can be much better than packets for this use case because you get an accurate number of source IPs for each requested domain. So, assuming that DNS is only allowed out of your firewall from the DNS server and you have those logs, you actually have better coverage than with packets.

Unfortunately, I didn't test this with any DNS logs since I didn't have access to any logs. It looks like the problem you are having is that your DNS logs populate the hostname into the cp.info meta key. The parser will only run on a meta callback for alias.host. So, only when alias.host is populated does the parser even get a chance to run. But even if the parser ran, it is expecting the format: abcd.something.com. So, (3)www(6)google(3)com(0) wouldn't parse correctly.
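To show the format difference, here is a minimal Python sketch that converts the length-prefixed form from the Windows DNS log into the dotted form the parser expects. It is only an illustration of the transformation. In NetWitness the conversion would have to happen in the log parser or in Lua, and the result would still need to be written to alias.host for the callback to fire.

```python
import re

# Matches the "(3)" style length prefixes, including the trailing "(0)".
_LENGTH_PREFIX = re.compile(r"\(\d+\)")


def windows_dns_to_dotted(raw: str) -> str:
    """Turn '(3)www(6)google(3)com(0)' into 'www.google.com'."""
    labels = [part for part in _LENGTH_PREFIX.split(raw.strip()) if part]
    return ".".join(labels)


print(windows_dns_to_dotted("(3)www(6)google(3)com(0)"))  # www.google.com
print(windows_dns_to_dotted("(3)udc(3)msn(3)com(0)"))     # udc.msn.com
```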

2019-01-15 02:54 PM

Hey Volodymyr,

You make a good point about IDNA encoded domains. I wasn't thinking of those. It would be interesting to test with some of those encoded domains to see how it affects the results. My guess is that since everything is still being encoded to ASCII characters, the entropy calculation is still valid but because of the xn-- prefix you could actually get a lower entropy value for the IDNA encoded domains. So, I don't think we would need to modify the entropy approach for those domains. But the length of the domains would be inflated.

In summary, I don't think an exception would be warranted, but the average subdomain length for IDNA encoded domains would be larger than is accurate and might lead to some innocuous domains looking more suspicious.
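A quick way to test the length effect is Python's built-in idna codec (which implements the older IDNA 2003 rules). This is a sketch for experimentation, separate from the parser, and the helper names are made up for the example.

```python
def to_ascii_label(label: str) -> str:
    """Encode a Unicode label into its ASCII (xn--) form."""
    return label.encode("idna").decode("ascii")


def is_idna_label(label: str) -> bool:
    """True when a label carries the IDNA ACE prefix."""
    return label.lower().startswith("xn--")


unicode_label = "пример"  # Russian for "example"
ascii_label = to_ascii_label(unicode_label)

print(ascii_label, is_idna_label(ascii_label))
print(len(unicode_label), "characters ->", len(ascii_label), "on the wire")
```

The encoded label is what appears in the DNS query, so the length the parser measures is the longer xn-- form. A check like is_idna_label could be used to tag those names so they can be reviewed separately in the report.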