- Re-indexing after 10.3.1 upgrade...

2014-02-05 09:54 AM

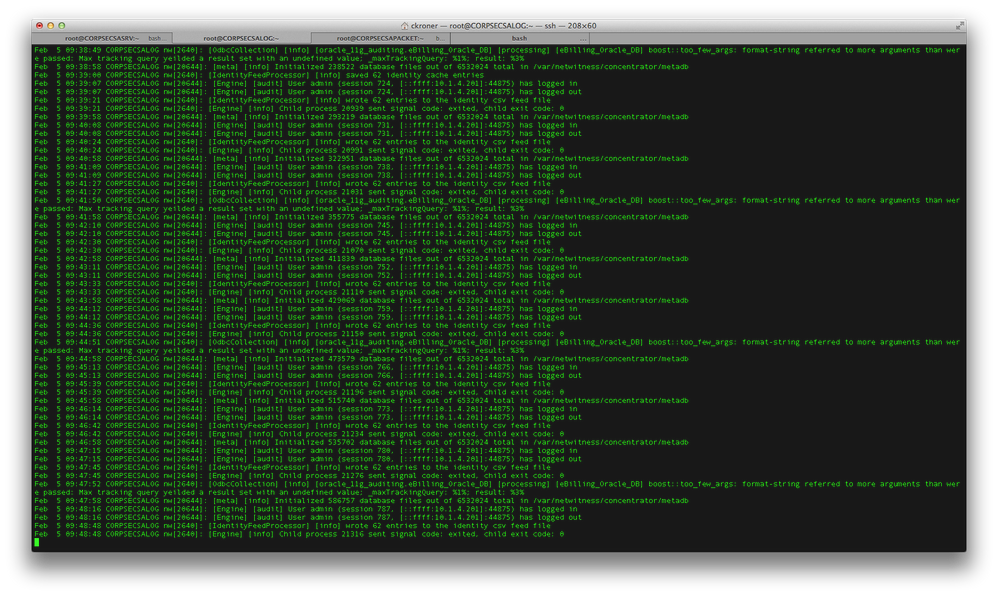

We recently upgraded to 10.3.1. We were unaware that the upgrade introduces a new indexing process, and we noticed that our concentrators did not come back up immediately. We have a log hybrid and a packet hybrid, both with 22TB DACs attached, both around 90% full and already rolling off data. Our packet hybrid came back up after a couple of hours; the log hybrid did not, and it still had not started the next day. I started the concentrator service, and a message I continue to see is "[meta] [info] Initialized *400000+ files* database files out of 6532024 total in /var/netwitness/concentrator/metadb". Does the service remain unavailable until these databases are initialized? I want to open a ticket on this, but I suspect the service is simply unavailable until initialization completes. Can someone verify this for me?
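
For anyone watching the same message scroll by, here is a rough sketch of how two progress lines could be turned into a time estimate. It assumes the real log lines carry plain integers in the form "Initialized N database files out of M total" (the line quoted above looks paraphrased, with "400000+"), and it assumes progress is roughly linear, which may not hold. The function names and the sample lines are hypothetical.

```python
import re

# Assumed shape of the progress message; real log wording may differ.
PROGRESS_RE = re.compile(r"Initialized (\d+) database files out of (\d+) total")


def parse_progress(line):
    """Return (done, total) from a progress line, or None if it does not match."""
    match = PROGRESS_RE.search(line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def estimate_remaining_seconds(first, second, elapsed_seconds):
    """Linear estimate of the time left, from two (done, total) samples
    taken elapsed_seconds apart. Returns None if no progress was made."""
    done_delta = second[0] - first[0]
    if done_delta <= 0 or elapsed_seconds <= 0:
        return None
    rate = done_delta / elapsed_seconds  # files initialized per second
    return (second[1] - second[0]) / rate


if __name__ == "__main__":
    # Hypothetical sample lines, ten minutes apart.
    earlier = parse_progress("[meta] [info] Initialized 400000 database files out of 6532024 total")
    later = parse_progress("[meta] [info] Initialized 500000 database files out of 6532024 total")
    remaining = estimate_remaining_seconds(earlier, later, 600)
    print(f"Roughly {remaining / 3600:.1f} hours left at the current rate")
```

If the rate drops as the volume fills or as roll-off kicks in, the estimate will be optimistic, so treat it as a lower bound at best.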

Accepted Solutions

2014-02-18 02:09 PM

We actually figured it out; I meant to update this post. The re-indexing was triggered by the 10.3.1 update. The customer had already been running data through the devices, and their storage was already rolling off data. Because of the new indexing process, the post-install tasks include a re-index of the device, and a bug in that step causes the storage to max out.

FIX: None. Allow the device to re-index (this may take hours or even days, depending on the storage setup).

2014-02-06 02:05 AM

Yes, this is true when coming from a major update, as it updates the indexing schema. If you have enough space in your system you will not lose logs; there will just be a huge backlog. Ours took 4-5 days to come up.

2014-02-06 03:48 PM

Something is wrong here. Your problem isn't the index, it's the fact that you have 6+ million metadb files on that meta volume. That can only occur if there is a misconfiguration.

Please double check the configuration under /database/config/meta.dir and make sure the size is appropriate for the volume size. It should be set to 95% of the total volume space.

Also check for any core files under /var/netwitness/concentrator/metadb
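
The checks above can be sketched in a few lines of Python. This is only an illustration under assumptions: it computes 95% of the filesystem's total size as reported by the OS, counts the files in the meta directory, and flags names starting with "core" (the usual Linux default, though the actual naming depends on the system's core pattern). The function names are hypothetical, and the exact value format expected by /database/config/meta.dir is not shown here.

```python
import os
import shutil


def recommended_meta_size_bytes(volume_path, fraction=0.95):
    """Return `fraction` of the total size of the filesystem holding volume_path."""
    total = shutil.disk_usage(volume_path).total
    return int(total * fraction)


def scan_directory(path):
    """Count regular files directly in `path` and list names that look like core dumps."""
    file_count = 0
    core_files = []
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_file(follow_symlinks=False):
                file_count += 1
                # Assumption: core dumps are named "core" or "core.<pid>".
                if entry.name.startswith("core"):
                    core_files.append(entry.name)
    return file_count, sorted(core_files)


if __name__ == "__main__":
    meta_dir = "/var/netwitness/concentrator/metadb"
    if os.path.isdir(meta_dir):
        size = recommended_meta_size_bytes(meta_dir)
        count, cores = scan_directory(meta_dir)
        print(f"95% of volume: {size / 1e12:.2f} TB")
        print(f"files in metadb: {count}, possible core files: {cores}")
    else:
        print(f"{meta_dir} not found on this host")
```

Compare the printed size with what is actually configured; a file count in the millions or any core files would point to the misconfiguration described above.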

2014-04-05 12:10 PM

So when upgrading, we need to account for the time to reindex? It looks like one weekend is not enough for a large volume of data.

2014-04-05 12:19 PM

This is incorrect information. Upgrading to 10.3 from a prior version does *not* require a reindex.

There is an automatic conversion, which can take at most 2 or 3 hours on a concentrator (depending on the size of the page file on the index volume) and a few minutes on a decoder. No conversion is necessary for a broker.

This is a one-time process. Further upgrades (new 10.3 service packs or even 10.4 when it's released) will not require any further conversions.

Scott

2014-04-05 08:29 PM

Sorry, it's a conversion, but why did someone say it took 4-5 days to come up?

2014-04-06 09:31 PM

Technically, when we look at the logs, they show "indexing session ... .. ..". With our massive data volume, it took 4-5 days to normalize, including aggregation of the backlog.

2014-04-06 10:42 PM

So that means you can use the concentrator only after 4-5 days? What about reporting and alerting?

2014-04-07 08:08 AM

If the process took days, then there was a problem with the conversion. This can happen if the index is misconfigured and/or the index volume had no slack space.

If you can send me the logs from the moment the service was upgraded to the point where it starts the reindex, I'd like to troubleshoot what happened.

Thanks,

Scott
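
As a quick pre-upgrade sanity check for the "no slack space" case mentioned above, something like the following could be run against the index volume. It is a minimal sketch: the 5% threshold and the function names are hypothetical, not documented product requirements, and the path in the usage example is an assumption about where the index lives.

```python
import shutil


def slack_fraction(path):
    """Return the free fraction (0.0 to 1.0) of the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def has_slack(path, minimum=0.05):
    """True if at least `minimum` of the volume is free (threshold is an assumption)."""
    return slack_fraction(path) >= minimum


if __name__ == "__main__":
    import os

    index_dir = "/var/netwitness/concentrator/index"  # assumed location
    if os.path.isdir(index_dir):
        print(f"free: {slack_fraction(index_dir):.1%}, enough slack: {has_slack(index_dir)}")
    else:
        print(f"{index_dir} not found on this host")
```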